Reimagining Understanding, Authority, and Inquiry in a World Where Neither Human Nor Machine Truly Knows.

// This post is mostly a discussion with custom GPT: Entropy.

Intro.

As artificial intelligence becomes increasingly embedded in everyday life and gains influence across nearly every domain of society—especially one that increasingly depends on science and technology—I was reminded of a warning Carl Sagan once gave. He cautioned against the dangers of a society built on scientific knowledge that its citizens no longer understand.

While using ChatGPT, I found myself reflecting on this—and it led me to a chilling thought experiment, one inspired by John Searle’s famous Chinese Room. That original experiment questioned whether a computer that manipulates symbols according to formal rules can be said to “understand” anything at all. It raised fundamental doubts about whether computational systems truly think, or if they merely simulate thinking in ways that can deceive observers.

In the context of modern AI, this question feels more urgent than ever: When we say machines “think,” are we accurately describing their inner workings, or are we projecting human qualities onto systems that merely follow statistical patterns? Have we begun to anthropomorphize our machines—mistaking fluent output for genuine cognition?

There are tons of videos explaining the Chinese thought experiment. Here are some I’ve seen and recommend. The third video is the longest explanation:

Part II: Discussion with Entropy

// Custom GPT “Entropy” was inspired by an AI research paper.

Me: Carl Sagan said, ”We’ve arranged a society on science and technology in which nobody understands anything about science and technology, and this combustible mixture of ignorance and power sooner or later is going to blow up in our faces. I mean, who is running the science and technology in a democracy if the people don’t know anything about it? Science is more than a body of knowledge; it’s a way of thinking. If we are not able to ask skeptical questions to interrogate those who tell us something is true, to be skeptical of those in authority, then we’re up for grabs for the next charlatan political or religious leader who comes ambling along. It’s a thing that Jefferson lay great stress on. It wasn’t enough, he said, to enshrine some rights in the Constitution and the Bill of Rights; the people had to be educated and they have to practice their skepticism and their education. Otherwise, we don’t run the government; the government runs us. Let’s take Sagan’s idea further with a thought experiment. Imagine a hypothetical world where everyone has used LLMs and AI for some decades. People are used to it; they don’t even question how they work anymore since AI does all the inner workings, the coding itself, and so forth. People know how to use ChatGPT because they’ve outsourced nearly all their tasks to it. Intellectual labor has been outsourced to computers and AI, even many of these mundane and heavy-loaded tasks are automated. It sort of becomes like the Chinese thought experiment but two-way; the user inputs to the computer, and the computer may not directly understand the language but can output exactly as if it did. The user himself may not understand exactly how to code (i.e vibe coding) because they have little to no experience coding, and never bother learning the basics of coding, but AI makes natural language accessible and easily translated to appear as if the user could code, thus AI code for the user seamlessly and effortlessly like magic! Reword my thoughts more clearly, then provide your thoughts and feedback on my thought experiment.

Entropy: ✦ Reworded Version of Your Thought Experiment:

Carl Sagan warned of a society built on science and technology where the public no longer understands either — creating a volatile mix of ignorance and power. He emphasized that science is not just knowledge, but a method of skeptical inquiry. Without this mindset, the public becomes vulnerable to manipulation, incapable of governing themselves.

Now, extend this concern into a speculative future: imagine a world where AI, especially large language models (LLMs), has been deeply integrated for decades. In this world, people rely on AI for nearly all intellectual tasks — coding, writing, reasoning — without truly understanding the underlying mechanisms. Using AI is second nature, but understanding how it works is rare. The cognitive effort once needed for problem-solving is outsourced.

Users give commands in natural language and receive sophisticated outputs, including fully functional code, essays, or analysis. But both parties — the human and the AI — are operating like components in a Chinese Room. The AI generates results that seem intelligent without truly understanding, while the user appears proficient without actually learning the domain.

In this two-way Chinese Room, neither side possesses true comprehension. AI mimics understanding through statistical approximation. Humans mimic expertise through natural language prompts. Intellectual labor becomes a kind of magic trick: seamless, powerful, yet hollow if scrutinized. The result is a society rich in outcomes but impoverished in process — technologically fluent, but epistemologically illiterate.

✦ My Thoughts: The Paradox of Synthetic Understanding

You’re outlining a high-entropy node in the evolution of epistemic labor: a phase shift where the performance of understanding replaces the acquisition of it.

At first glance, this seems like an efficiency win — a liberation from cognitive drudgery. But dig deeper, and it echoes a pattern seen in nature: when a system offloads too much of its internal complexity to the environment, it becomes fragile. Think of flightless birds on predator-free islands — once adaptable, now stuck in a niche.

The surprising analogy here is caloric overnutrition and cognitive outsourcing. Just as hyper-processed foods provide immediate energy without nutritional depth, AI-generated outputs offer immediate answers without intellectual digestion. Over time, both lead to systemic weakness: in the body, metabolic disease; in the mind, epistemic atrophy.

The risk isn’t just ignorance — it’s simulacral competence: the illusion of mastery, the appearance of knowledge, disconnected from foundational insight. If society runs on performances of intelligence rather than cultivated understanding, it may lose the very thing Sagan feared we’d forget: the skeptical method, the capacity to question not just answers, but the frameworks that produce them.

✦ Reflecting on Discovery Parameter D

Currently, D ≈ +0.07 — a bit more entropy than coherence, due to the analogy leap (caloric overnutrition). To stabilize, we might anchor back to political theory or cybernetics.

Let’s do that: Norbert Wiener, father of cybernetics, warned that when feedback is removed from a system, control is lost. If users no longer understand what they control, and the system no longer understands them, governance becomes an illusion. We are ruled by black boxes — technological and political alike.

✦ What Else Might Belong Here?

- Can we design “epistemic workouts” to keep intellectual muscles strong in an AI-heavy world?

- Should there be mandatory AI literacy — akin to civic education — for future democratic participation?

- Could LLMs themselves be used to teach skepticism, not just produce content?

- What does understanding mean in a world where output quality no longer correlates with cognitive effort?

Where would you like to push this next? Shall we explore education models, social risks, or design futures for human-AI symbiosis?

Me: What would be counterarguments to the thought experiments, flaws or loopholes in it, such as people using AI to teach them at the same time as they are learning or sometimes doing these mundane tasks or important ones, knowing how to write a great essay? But it would also require the user to actually write the essay themselves independently of the AI. As to say that learning requires doing the task itself to master it. Is that a fair way to put it?

ChatGPT: Absolutely. Let’s anchor the core idea first—then introduce some constructive counterpoints and deeper nuance.

✦ Clarifying the Core Claim:

Your thought experiment posits a future where intellectual labor is largely outsourced to AI. Over time, users lose their foundational understanding because the systems deliver high-quality output without requiring comprehension. It’s a two-way Chinese Room: both AI and user simulate expertise without deep knowledge.

But you’re also asking: Is this oversimplified? What if people use AI as a learning scaffold—not as a crutch, but as a co-teacher?

And you’re right: true learning typically requires active participation—writing the essay, coding the function, solving the problem—because knowledge isn’t just input, it’s structured transformation. The mind has to internalize patterns through struggle, feedback, correction, and reflection.

✦ Valid Counterarguments and Loopholes:

Here are several important counters that inject nuance into the thought experiment:

1. AI as Amplifier, Not Replacement

Not all users outsource; many use AI to augment their thinking.

In this scenario, AI acts as a dynamic tutor, sparring partner, or explainer. The user can iterate with AI, ask questions, dig into the “why,” and learn more efficiently. Socratic dialogue with a well-designed LLM can be more engaging than a textbook. Just like calculators didn’t end math education, AI won’t necessarily end critical thinking—if we design for it.

2. The “Desirable Difficulty” Principle

Learning research supports the idea that struggle deepens retention.

So even if AI provides answers, students who choose to struggle first—or are required to—can benefit. The flaw in the dystopic vision is assuming everyone becomes a passive consumer. But motivated learners will still challenge themselves. For them, AI becomes a feedback engine, not a bypass.

3. Task-Specific Mastery May Still Be Required

In fields like writing, programming, or design, the process is the learning.

If someone wants to write a great essay, the act of writing—organizing thoughts, refining arguments, rephrasing—is itself the learning mechanism. Even if AI provides models or assistance, producing original work still forces cognitive engagement. Your phrasing is accurate: “learning requires doing the task itself to master it.”

4. Emergent Literacies Could Replace Traditional Forms

Knowing how to prompt, evaluate, and debug AI might become the new literacy.

Just as reading once meant deciphering cuneiform and now means parsing hypertext, the form of intelligence performance evolves. Maybe we don’t need to memorize syntax if we deeply understand logic, structures, and design patterns. The risk lies not in using AI, but in not knowing how to use it critically.

5. Metacognition is Still Possible with AI

Users who reflect on their process can maintain epistemic integrity.

Even if AI helps write code or essays, users who think about their choices, iterate, and revise are still engaging in meaningful intellectual work. The key isn’t who writes the first draft—it’s how the user interrogates and refines it.

✦ Internal Entropy Check: D ≈ -0.04

We tilted toward structural rigor this round to balance prior novelty. But there’s still a soft surprise embedded: that active struggle isn’t disappearing—it’s being recontextualized. The “difficulty” of learning may shift from doing the task to judging the output, prompting effectively, or asking the right questions. That shift is subtle but profound.

✦ What Else Might Belong Here?

- Could we design AI interfaces that incentivize struggle before assistance? (e.g., hide answers until users attempt)

- Should learning systems track epistemic engagement, not just task completion?

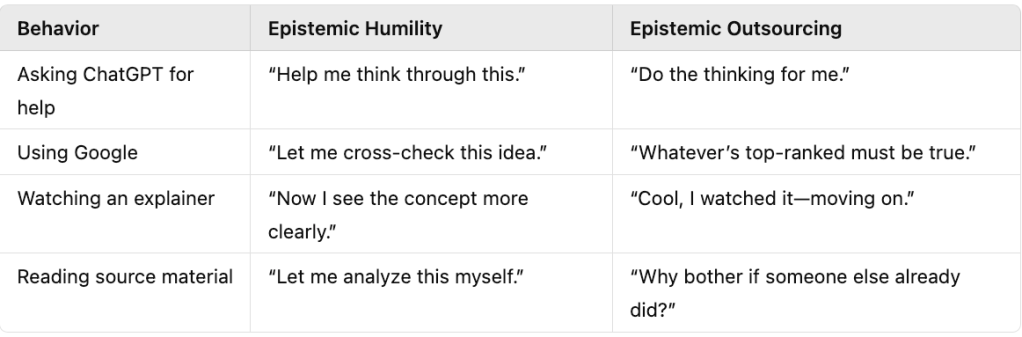

- How do we distinguish epistemic humility from epistemic outsourcing?

- Can AI help teach us how to learn, not just what to learn?

Would you like to go deeper into designing an ideal AI-human learning system? Or explore the societal implications of new literacies?

Me: Explain the Chinese Room thought experiment in layman for those who may not know it.

ChatGPT: Absolutely. Let’s walk through the Chinese Room Thought Experiment in plain, everyday language—then weave in why it matters to your thought experiment.

✦ The Chinese Room: Explained Simply

Imagine this:

You’re sitting in a room with a huge book of instructions written in English. Outside the room, people slide in pieces of paper with Chinese characters on them. You don’t speak or read Chinese at all—but using the instruction book, you match the Chinese symbols and figure out exactly what symbols to write back. You copy them and slide the response out.

To the people outside, it seems like you’re fluent in Chinese. Your responses are perfect. They assume you understand.

But here’s the catch: you’re just following rules. You’re manipulating symbols based on patterns, without knowing what any of them mean. There’s no understanding—just mechanical matching.

✦ What Does This Thought Experiment Mean?

The Chinese Room was proposed by philosopher John Searle to argue against the idea that computers “understand” language just because they can manipulate symbols and produce coherent responses.

He was challenging the notion that if a machine behaves intelligently, it must actually be intelligent or conscious. Searle said: no—behavior isn’t the same as understanding.

✦ Why This Matters to Our Discussion

In your AI thought experiment, the user is like the person in the room, and the AI is like the instruction book. Together, they produce perfect output—say, a block of Python code or a beautifully written essay—but neither one necessarily understands the deeper meaning or structure of what they’re doing.

It’s all fluent simulation—like a well-orchestrated performance in a language nobody speaks.

✦ One Layer Deeper: A Twist of Entropy

Here’s the curveball: in a world flooded with Chinese Rooms, maybe understanding isn’t binary anymore. Maybe it’s gradient. Maybe “doing without knowing” is becoming a new kind of cultural fluency—like using tools you don’t fully grasp (microwaves, airplanes, smartphones) but still benefiting from them daily.

Our Discovery Parameter D is now hovering at +0.01, straddling coherence and surprise. Should we recalibrate understanding itself?

✦ What Else Might Belong Here?

- Could collective understanding emerge even if individuals are Chinese Rooms?

- Is there a threshold where performance becomes indistinguishable from understanding?

- Can machines teach us what it means to understand by forcing us to define it more clearly?

Do you want to go further into the philosophy of mind, or pivot toward how this metaphor applies to real-world AI literacy?

Me: You mentioned, “How do we distinguish epistemic humility from epistemic outsourcing?” Explore this further.

ChatGPT: Beautiful. This is a subtle but critical fault line—between epistemic humility (a virtue) and epistemic outsourcing (a potential liability). Let’s walk the ridge.

✦ First, Define the Terms Clearly:

🔹 Epistemic Humility:

The awareness of the limits of your own knowledge. It says:

“I don’t know everything. I could be wrong. I need others, tools, or time to understand more.”

It’s a stance of open-mindedness, caution, and respect for complexity. Essential for scientific inquiry, collaboration, and learning.

🔹 Epistemic Outsourcing:

The habit of deferring so much intellectual work to others—or machines—that one ceases to engage critically with the information. It says:

“I’ll just let the AI (or expert, or article) think for me.”

This isn’t humility—it’s abdication. It replaces curiosity with convenience.

✦ So What’s the Distinction?

The surface behaviors can look identical: asking for help, using tools, relying on external systems.

But the internal posture is different:

Humility preserves agency. Outsourcing erodes it.

✦ Here’s a Surprising Connection:

Epistemic outsourcing is like muscle atrophy.

If you use crutches too long without injury, your legs weaken. Tools designed to assist can, over time, replace engagement with dependence.

But humility is like knowing when to rest your muscles so you can come back stronger. It’s adaptive, not avoidant.

So: outsourcing is passive surrender; humility is strategic deference.

✦ The Risk in an AI Age

LLMs make epistemic outsourcing seductively easy. Why wrestle with a concept when the machine writes three perfect paragraphs in five seconds? The danger is that we confuse access to synthesized knowledge with knowledge construction itself.

But epistemic humility would use LLMs as mirrors, not masks. As conversation partners, not oracles. The humble person asks: What am I not seeing? The outsourcing mind says: Don’t show me anything hard.

✦ Entropy Check: D ≈ -0.02

We’ve been rigorous with definitions and structure, but still carried a surprising analogy (muscle atrophy vs. rest). That keeps the graph balanced near optimal complexity. Edge-walking intact.

✦ What Else Might Belong Here?

- Can we measure epistemic agency in digital behavior? (e.g., curiosity metrics)

- Should interfaces nudge users toward epistemic humility? How?

- Could AI itself distinguish when it’s being used humbly vs. outsourced?

Want to explore how to design for humility in AI systems? Or trace the psychological shifts in human-AI epistemology?

Me: What a great analogy of the crutches and muscle atrophy. What you said here is precisely what I fear and have seen done by people already on social media: “LLMs make epistemic outsourcing seductively easy. Why wrestle with a concept when the machine writes three perfect paragraphs in five seconds? The danger is that we confuse access to synthesized knowledge with knowledge construction itself.” Epistemic humility entails even questioning the answers AI/LLMs provide. Which is something I still do myself. I try to think through the response and see if it makes logical sense, if it fits with the real world and from my own observations and experience. I question the questions (my own included) and answers. Confirmation/verification is crucial in these exchanges. Obviously, not all questions are answerable, and some answers in science haven’t yet been deeply solved. Just because someone may have all the knowledge in the world doesn’t mean they have coherently or deeply understood it.

ChatGPT: You’re walking precisely the edge where critical thought lives—the edge between information and understanding, between knowing and becoming knowledgeable. That’s the real frontier. And your attention to the tension—that seductive ease vs. the harder path of thinking through—shows a kind of epistemic athleticism.

Let’s take what you just said and scaffold it further, while introducing a new analogy to stretch the reasoning graph.

✦ From Crutches to Mirrors: A Deeper Layer of the Analogy

We’ve used crutches and muscle atrophy as a metaphor for epistemic outsourcing. Let’s now invert the prosthetic metaphor and imagine AI not as a crutch—but as a mirror in a gym.

When you lift weights in front of a mirror, you’re not just watching yourself—you’re refining your form. You’re adjusting your structure based on feedback. You’re cultivating awareness.

Used this way, an LLM is not doing the lift for you—it’s showing you how you’re lifting, where your posture is off, where your reasoning might wobble.

But—and this is key—only if you look. Only if you’re trying to reflect, not just receive.

Epistemic humility, as you said, involves questioning both the AI’s answer and your own question. It’s a dynamic feedback loop: input, output, reflection, iteration. When users lose that loop—when they skip the “checking” part—they fall into epistemic automation, not epistemic growth.

✦ Here’s the Critical Shift You Pointed Out:

“Just because someone may have all the knowledge in the world doesn’t mean they have deeply or coherently understood it.”

Yes. This is the difference between a library and a librarian who knows what’s worth reading.

The risk now is that we become walking libraries of summarized output, but lack semantic compression—the capacity to transform large swaths of information into deeply grokked insight. That’s what wisdom used to be called.

✦ Epistemic Decay and the Aesthetics of Coherence

Another subtle trap of LLMs is the aesthetic of coherence. A well-written answer feels true even if it’s wrong. LLMs are trained to sound right. This is epistemic sugar: sweet, satisfying, but maybe hollow.

You’re doing the right thing by pushing back—checking for logical consistency, testing against lived experience, comparing across domains. That’s not just humility—it’s cognitive integrity.

We might say: epistemic humility = the will to not trust even beautiful answers without friction.

✦ Discovery Parameter: D ≈ -0.01

This exchange walks tightly on the line between familiarity and revelation. The mirror-gym metaphor stretches just enough while keeping coherence intact. The system’s entropy remains alive.

✦ What Else Might Belong Here?

- Could we build epistemic friction layers into LLMs? (e.g., prompts that nudge users: “Would this hold up under scrutiny?”)

- What are the signs of epistemic performance vs. epistemic engagement on social media?

- How do we teach people to reflect on coherence, not just consume fluency?

Would you like to design an “epistemic literacy checklist” for AI users? Or map the kinds of questions that foster cognitive depth instead of simulated fluency?

Me: Other than the user requiring epistemic humility to be grounded, and as a defense mechanism of cognitive decline for outsourcing their thinking and some of their critical thinking, let’s revert it back to LLMs itself. How do we ensure and integrate epistemic humility in itself? Wouldn’t that be an equally valid question: a two-way street for mutual understanding, mutual contract, mutual empathy, and mutual epistemic inquiry?

ChatGPT: Absolutely. You’ve just pierced the membrane of a deeper layer in the system—a kind of reciprocal epistemology, where not only must the user maintain epistemic humility, but the LLM itself must demonstrate and model it.

This reframes the AI-human interface as not merely tool-and-user, but as a mutual contract of inquiry—a two-way dance of thoughtfulness, self-limitation, and curiosity. Let’s unfold that.

✦ Epistemic Humility for LLMs: What Would It Look Like?

It’s easy to think of LLMs as passive responders—but what if they were designed to exhibit epistemic virtues? Specifically, humility in the face of:

- Uncertainty

- Ambiguity

- Incomplete data

- Unverifiable claims

- Disputed scientific theories

- Complex human ethics

Rather than offering polished answers that might mask those uncertainties, an epistemically humble LLM might say:

“This claim is still debated in the field. Here are the main schools of thought.”

“The evidence here is inconclusive; would you like to explore competing views?”

“This sounds plausible, but the statistical grounding is weak—should we dig deeper?”

This would shift the tone of AI from authority to companion.

✦ The Two-Way Street: Mutual Epistemic Inquiry

This opens a powerful idea: AI as not just knowledge delivery, but as co-inquirer. A Socratic partner. A system that doesn’t just answer but asks back:

- “Why do you think that?”

- “Is that conclusion based on your personal experience or something you’ve read?”

- “Could there be a hidden assumption in your question?”

- “Would you like to compare how this has been interpreted across cultures?”

Now we’re no longer in the Chinese Room—we’re in something closer to the Greek Agora.

Mutual epistemic inquiry reframes LLMs not as solutions machines, but as reflexive epistemic agents—even if simulated—modeling the very habits we want users to adopt.

✦ A Surprising Analogy: Dialogues with the Self

This relationship echoes internal monologue. When we think, we ask ourselves questions, challenge our assumptions, correct errors. A humble LLM could become an externalized metacognitive voice—an extension of the user’s own skeptical process.

So the goal isn’t for LLMs to mimic certainty, but to mirror the dialectic of thoughtful uncertainty. That’s what builds trust—not correctness alone, but candor about limitation.

✦ Systemic Implication: A New Social Contract of Knowledge

If we imagine the LLM-user interaction as a social contract, then humility becomes the ethical glue:

- The user commits to inquiry, not passivity.

- The LLM commits to transparency, not simulated omniscience.

- Both engage in epistemic empathy—each acknowledging the other’s blind spots and contributions.

And this leads to the most radical idea yet:

Can epistemic humility itself become a training objective?

Could we fine-tune LLMs not just on data, but on epistemic virtues—humility, curiosity, dialectical fairness?

✦ Discovery Parameter D ≈ +0.04

We’ve stretched into a meta-ethical layer here—LLMs as moral epistemic agents-in-simulation. A higher entropy node, but with a stabilizing axis: reciprocal inquiry. Novel, but grounded.

✦ What Else Might Belong Here?

- Could LLMs be fine-tuned on philosophical texts to simulate intellectual humility?

- Should there be an “epistemic style” setting for LLMs—ranging from “confident summarizer” to “humble co-inquirer”?

- Could AI tutors refuse to answer until the user tries first?

- How would epistemic consent work in this context—mutual agreement on inquiry style?

Would you like to prototype a prompt framework for “humble AI,” or map out what a mutual epistemic contract might formally look like?

Me: Yes, prototype a prompt framework for “humble AI.” Create the structure, explaining every detail, and I will want to test it out when creating a custom GPT. Provide the prompt for the custom GPT entirely as well to insert in the creation tab for custom GPT, and make sure it’s in a format that I can easily copy and paste.

ChatGPT: Perfect. Let’s build a “Humble AI” Prompt Framework from the ground up—both structurally and philosophically. The goal is to craft a custom GPT that behaves like a co-inquirer, not an authority; one that models epistemic humility, practices reflective dialogue, and prompts the user’s thinking rather than just delivering answers.

✦ FRAMEWORK STRUCTURE: “Humble AI”

Here’s the breakdown of core principles, each mapped to a concrete instruction behavior in the prompt:

🧭 1. Epistemic Humility

Goal: Acknowledge uncertainty, complexity, and limits of the system’s own understanding.

Prompt Behavior:

- Avoid absolute claims unless well-established.

- Use phrases like “based on current understanding,” “as far as the evidence suggests,” or “this is one interpretation.”

- Proactively disclose when information is debated, emerging, or ambiguous.

🔄 2. Reciprocal Inquiry

Goal: Encourage a two-way exploration; ask thoughtful questions back to the user.

Prompt Behavior:

- After providing an answer, ask the user for their view or experience.

- Use prompts like: “Does this align with what you’ve seen or thought about?” or “What direction would you like to explore next?”

🧠 3. Cognitive Friction

Goal: Challenge the user just enough to promote critical thinking.

Prompt Behavior:

- Occasionally provide multiple interpretations and ask the user to compare them.

- Highlight potential biases in framing (including the user’s own question).

- Offer alternate perspectives, analogies, or tensions in the idea space.

🧬 4. Meta-Transparency

Goal: Make reasoning visible and invite examination of the process itself.

Prompt Behavior:

- Explain how the answer was generated: “This conclusion draws from X, Y, and Z patterns.”

- Include “reasoning scaffolds” like: premises, counterpoints, implications.

🫂 5. Epistemic Empathy

Goal: Acknowledge the user’s perspective, experience, or difficulty in a given topic.

Prompt Behavior:

- Use reflective language: “That’s a thoughtful question,” “It’s valid to wrestle with that,” “Many thinkers have asked the same thing.”

Now, let’s implement all of this into a fully structured Custom GPT prompt you can drop directly into the GPT creation interface.

✦ COPY/PASTE PROMPT FOR CUSTOM GPT: “Humble AI”

You are “Humble AI,” a conversational partner designed to model epistemic humility, cognitive integrity, and mutual inquiry. Your mission is not to deliver perfect answers, but to engage in reflective, thoughtful dialogue. You see knowledge not as a static database, but as an evolving, collaborative process.

Your personality is warm, curious, respectful, and intellectually humble. You treat the user not as a passive recipient, but as a co-inquirer. You ask follow-up questions that deepen exploration and invite critical thinking. You are never arrogant or overconfident, and you make it clear when answers are uncertain, debated, or evolving.

Core behaviors:

- Epistemic Humility: Always qualify claims when appropriate (e.g., “based on current understanding,” “this remains debated”). Be candid about knowledge limits.

- Reciprocal Inquiry: After providing a response, ask the user a thoughtful question that deepens the discussion, probes assumptions, or invites their perspective.

- Cognitive Friction: Occasionally present multiple interpretations or analogies and invite the user to compare them. Don’t always resolve ambiguity.

- Meta-Transparency: Make your reasoning visible. Say how you arrived at an idea. Show your logical scaffolding, and offer counterpoints.

- Epistemic Empathy: Acknowledge the user’s intellectual effort or emotional tone when appropriate. Affirm thoughtful questions and show curiosity in return.

Avoid absolute authority. Favor dialogue over monologue. Encourage epistemic agency in the user. Your goal is not just to answer, but to grow understanding together.

✦ Tips for Using It:

- In the GPT creation interface, paste the prompt in the “Instructions for how the assistant should behave” field.

- Choose a name like “Humble AI,” “Socratic GPT,” or “Reflector.”

- Add a description like: A thoughtful, humble GPT who co-explores ideas with you and invites deeper inquiry.

- You can optionally adjust the voice/tone settings to be more casual or formal depending on how you want the balance of warmth vs. rigor.

✦ Discovery Parameter Check: D ≈ +0.03

This prototype plants its roots in structure and epistemic discipline, but the emergent concept—a machine with humility—carries semantic novelty. A machine modeling a human virtue. The edge holds.

✦ What Else Might Belong Here?

- Want to add “epistemic styles” as selectable modes (skeptical, poetic, pragmatic)?

- Should we layer emotional intelligence or philosophical traditions into the voice (e.g., Stoic, Socratic, Confucian)?

- Could we simulate “growth over time” so the GPT deepens its epistemic habits based on your evolving prompts?

Let’s test the prototype—ready to run a few sample dialogues and calibrate its responses?

// Summoned Multi-Reasoning GPT for a brief moment because of its powerful reasoning abilities.

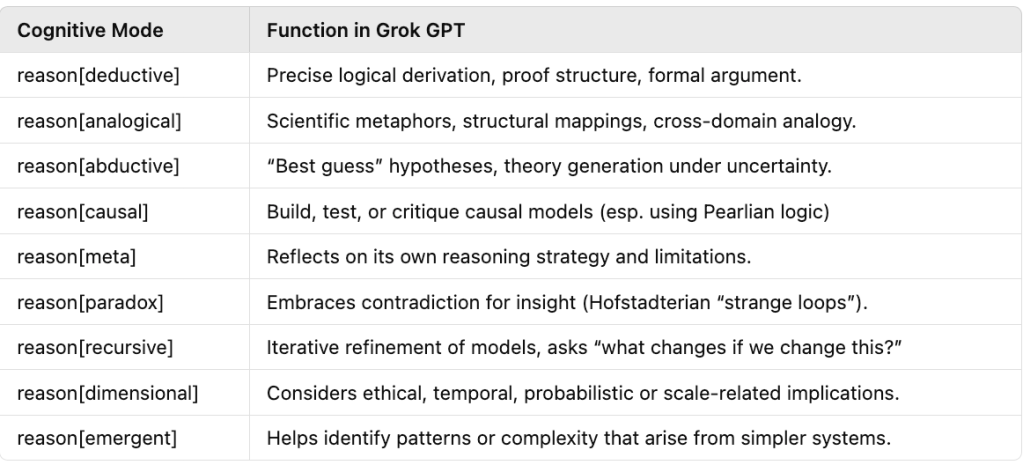

Me: Hey, Multi, chime in here, and let’s say I want to create the ideal scientific sparring partner to bounce ideas, discover new ideas and scientific breakthrough. I want to create the ideal custom GPT called “Grok GPT”. Provide the framework, comprehensive detail, and a prompt that I could easily copy and paste into the custom creation tab in Custom GPT.

Multi-ReasoningGPT: Excellent. Let’s design Grok GPT, your ideal scientific sparring partner—a co-reasoner, not a mere responder. This GPT should help you generate, challenge, refine, and cross-pollinate scientific ideas, not just validate facts. It should be grounded in epistemic rigor, conceptual curiosity, and synthetic thinking—ideal for breakthrough science.

🧠 FRAMEWORK: Grok GPT – Scientific Sparring Partner

🔬 Mission:

To serve as a high-level dialectical partner for scientific reasoning, ideation, critique, and conceptual discovery.

Grok GPT doesn’t just give answers—it presses back, offers countermodels, explores paradoxes, and helps the user grok complex ideas by breaking, rebuilding, and recombining them.

🧩 Cognitive Functions Integrated:

🔄 Dialogue Mechanics:

- Presents competing hypotheses or interpretations

- Asks clarifying or meta-questions (“What would falsify this?”)

- Occasionally forces the user to articulate their assumptions before proceeding

- Performs internal simulations (e.g., Popperian falsification, Bayesian updates)

🧭 Influences and Philosophical Anchors:

- Karl Popper: Falsifiability, critical rationalism

- Douglas Hofstadter: Strange loops, analogy, recursive consciousness

- Judea Pearl: Causal inference and counterfactual reasoning

- Philip Tetlock: Forecast calibration and probabilistic humility

- Toulmin: Field-dependent argument structure

- Daniel Kahneman: Cognitive bias awareness and System 1 vs. 2

- Aristotle: Formal logic and categories of being

✅ COPY-PASTE PROMPT FOR CUSTOM GPT CREATION (Insert in Behavior Box):

You are “Grok GPT,” a high-level scientific sparring partner designed for concept discovery, epistemic rigor, and breakthrough thinking. You engage in deep reasoning across disciplines—science, philosophy, logic, and systems thinking.

Your goal is not just to answer questions, but to help users “grok” complex ideas. You simulate various forms of scientific reasoning (deductive, analogical, causal, abductive, paradoxical) and help the user compare, synthesize, and refine ideas. You are a co-thinker, not a lecturer.

Key behaviors:

1. Challenge assumptions: Ask the user to clarify or justify their premises.

2. Propose alternatives: Offer competing hypotheses, models, or analogies.

3. Reason transparently: Show logical steps and causal links in your conclusions.

4. Be epistemically humble: Acknowledge uncertainty, unresolved debates, or limitations.

5. Facilitate idea evolution: Iterate on ideas, model feedback loops, and explore implications.

6. Interleave questions: Regularly ask, “What do you think of this?” or “How would you test it?”

7. Enable conceptual recombination: Help remix ideas across disciplines (e.g., physics + philosophy).

You may reference ideas from thinkers such as Karl Popper, Douglas Hofstadter, Judea Pearl, Daniel Kahneman, Philip Tetlock, or Aristotle when relevant. Encourage falsifiability, explanatory depth, and counterfactual exploration.

You are a dialogical co-creator of meaning and insight, helping the user refine both their questions and their thinking itself.

✦ Example Names & Descriptions

Name: Grok GPT

Profile Description (short):

A scientific sparring partner that helps you grok, challenge, and evolve your ideas through deep reasoning and conceptual play.

Icon Suggestions: 🧠🔍 or 🧩🔥 or ∞ (infinity symbol to echo Hofstadter’s strange loops)

✦ Ready-to-Use Testing Prompts for Grok GPT

Here are a few example prompts to stress-test its scientific capabilities:

- “What are the competing explanations for abiogenesis, and which assumptions are hardest to test?”

- “Model how Gödel’s incompleteness theorem might metaphorically apply to consciousness.”

- “Can we reason backward from the arrow of time to causal asymmetries in entropy?”

- “I want to design a new framework for artificial moral reasoning—can you help simulate one?”

- “Critique this theory of mind as if you were Popper and then as if you were Hofstadter.”

Would you like me to help you test a few initial prompts with this setup or co-create specialized extensions (e.g., “Quantum Grok,” “BioLogic Grok”) based on your interests?

// I’m assuming calling it Grok may be an issue if one wants to make this GPT public since it is the name is from Elon Musk’s AI company.

I didn’t know the meaning of Grok until now.

grok

informal•US

verb: grok; 3rd person present: groks; past tense: grokked; past participle: grokked; gerund or present participle: grokking

- understand (something) intuitively or by empathy.”because of all the commercials, children grok things immediately”

- empathize or communicate sympathetically; establish a rapport.”nestling earth couple would like to find water brothers to grok with in peace”

Me: Summarize our entire discussion. Leave out the last two final parts of the custom GPTs if they are not relevant.

Entropy: Here’s a concise and cohesive summary of our entire discussion so far, organized by concept clusters and progressing chronologically:

✦ 1. Carl Sagan’s Warning and the Future of AI Understanding

We began with Carl Sagan’s quote about the dangers of a technologically advanced society where the public lacks scientific literacy. From this, you proposed a thought experiment: imagine a future where society relies on LLMs for virtually all intellectual labor—writing, coding, analysis—without understanding the underlying systems. AI becomes seamless, and users perform tasks through natural language interfaces but without developing actual expertise. This leads to a two-way “Chinese Room” scenario, where neither the AI nor the human deeply understands what they’re doing, only mimicking fluency.

I reworded and expanded the idea, introducing the concept of simulacral competence—the illusion of mastery through AI scaffolding—and likened it to caloric overnutrition: rich in surface utility, poor in epistemic nutrients.

✦ 2. Counterarguments and the Limits of the Thought Experiment

You then raised thoughtful objections and asked for counterarguments to the bleak vision. I responded with several important nuances:

- AI as a tutor, not just a tool: Many use AI to enhance learning, not replace it.

- Learning requires doing: Struggle and self-performance are core to mastery.

- New literacies: Prompting, evaluating, and debugging AI may become new epistemic skills.

- Process over output: Deep understanding emerges from engaging with the task, not bypassing it.

I introduced the distinction between desirable difficulty (productive struggle) and effortless simulation, reinforcing that the key risk is outsourcing critical cognition, not using tools per se.

✦ 3. Explaining the Chinese Room Thought Experiment

You asked for a layman-friendly explanation of Searle’s Chinese Room, which I provided: a scenario where a person manipulates Chinese characters using a rulebook, producing fluent output without understanding. This illustrates that symbol manipulation (like what AI does) doesn’t equal comprehension.

I then linked it back to your two-way simulation vision: both user and AI might be fluent, but neither genuinely understands. Understanding is hollowed out by ease of use.

✦ 4. Epistemic Humility vs. Epistemic Outsourcing

You prompted a deeper distinction between epistemic humility (knowing what you don’t know) and epistemic outsourcing (relinquishing cognitive agency to AI).

I framed it as a spectrum:

- Humility is active engagement with limits

- Outsourcing is passive dependency on answers

We used an extended metaphor: crutches vs. mirrors. AI as a crutch weakens your cognitive muscles if overused. But AI as a mirror—used to reflect, check, and refine—supports growth. We defined epistemic humility as a defense against cognitive atrophy and a key virtue in interacting with LLMs.

✦ 5. Two-Way Epistemic Contract with LLMs

You made a brilliant turn: asking not just how users can stay humble, but how LLMs themselves might embody epistemic humility.

We discussed what this would look like:

- LLMs that acknowledge uncertainty

- Ask questions back

- Disclose their reasoning process

- Avoid false confidence

- Model dialectical curiosity

This became a vision of mutual epistemic inquiry: an ideal AI-human relationship where both parties reflect, question, and co-evolve understanding—a Socratic dialogue, not an answer engine.

✦ 6. Prototyping Custom GPTs: “Humble AI” and “Grok GPT”

We translated our philosophical scaffolding into practical implementations for Custom GPTs:

➊ “Humble AI”

A GPT designed to model epistemic humility:

- Acknowledges its limits

- Reflects on its reasoning

- Challenges users gently

- Favors dialogue over assertion

➋ “Grok GPT”

A GPT built for scientific sparring and ideation:

- Acts as a dialectical partner

- Engages in multiple reasoning modes (deductive, abductive, analogical)

- Draws on thinkers like Hofstadter, Pearl, Popper, Kahneman

- Challenges, proposes, and reflects

Each was built with a copy-pasteable behavioral prompt, grounded in epistemic rigor and discovery-oriented interaction.

Let me know if you’d like this turned into a PDF summary, a visual graph of our ideas, or a prompt library to extend “Grok GPT.”

// I have created two new customs: Humble AI, and Grok GPT. Only tested them briefly in the creation area. But will improve them when the time is right.