I was trying to figure out which ChatGPT model was the most capable, to make sure I wasn’t wasting my time or underutilizing ChatGPT, and the conversation continued from there.

// ChatGPT 4o

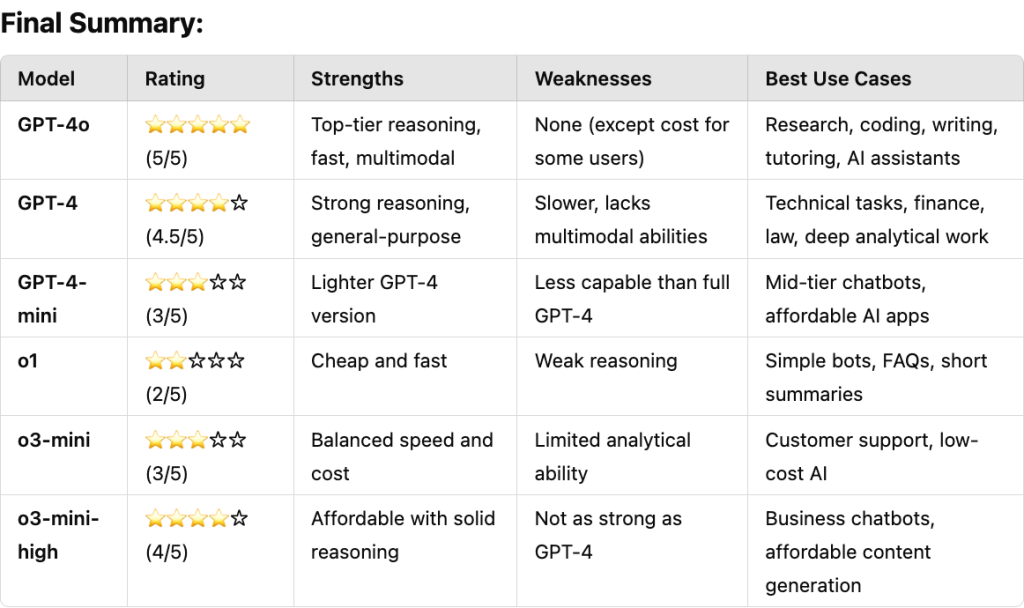

Me: Rate all these models 1-5 stars and reasons why and best use cases: GPT 4o, o1, o3-mini, o3-mini-high, GPT4-mini, and GPT4.

ChatGPT: Here’s a breakdown of each model with a rating (1–5 stars), reasons for the rating, and their best use cases.

1. GPT-4o ★★★★★ (5/5)

Reasons for the Rating:

✅ Fast and highly capable – A significant upgrade over GPT-4, offering superior reasoning, memory, and multimodal capabilities.

✅ Cost-efficient compared to GPT-4 Turbo – More powerful than GPT-4 Turbo while being efficient.

✅ Stronger contextual understanding – Handles complex prompts better than previous versions.

✅ Multimodal abilities – Stronger image, audio, and text generation capabilities than previous models.

✅ Improved real-time interactivity – Works well in dynamic or conversational applications.

Best Use Cases:

- High-level reasoning tasks (e.g., academic research, deep technical discussions, complex code generation)

- Creative writing and brainstorming

- Customer support AI with strong comprehension

- Advanced tutoring for students

- Any situation where multimodal capabilities (text + image + potential audio in the future) are needed

2. GPT-4 ★★★★☆ (4.5/5)

Reasons for the Rating:

✅ Still one of the strongest general-purpose AI models – Performs well across a wide range of tasks.

✅ Superior reasoning and understanding compared to 3.5 models

✅ Great for technical and complex tasks

⛔ Slower and more expensive compared to GPT-4o

⛔ Lacks real-time multimodal capabilities

Best Use Cases:

- Deep analytical reasoning (e.g., law, philosophy, finance)

- Technical fields that require precision (e.g., engineering, AI research)

- High-stakes professional writing

- Coding assistance (for non-real-time tasks)

- When you need a powerful model but don’t yet have access to GPT-4o

3. GPT-4-mini (Assuming this is a model in OpenAI’s internal lineup, though it isn’t publicly known) ★★★☆☆ (3/5)

Reasons for the Rating:

✅ Likely a lighter-weight version of GPT-4 – Balanced between cost and efficiency.

✅ Would be useful for applications that don’t need full GPT-4 power

⛔ Wouldn’t match full GPT-4 reasoning power

⛔ Would likely have some cutbacks in contextual depth

Best Use Cases:

- General-purpose chatbot interactions

- Tasks where GPT-4 is overkill but GPT-3.5 is too weak

- More affordable AI applications

4. o1 (OpenAI’s lightweight model, likely 3.5-level or lower) ★★☆☆☆ (2/5)

Reasons for the Rating:

✅ Very cost-efficient – Ideal for applications needing a lightweight model.

✅ Faster than heavy models like GPT-4o

⛔ Weak reasoning power compared to GPT-4 family

⛔ Limited in complex tasks or long contextual reasoning

Best Use Cases:

- Basic customer support bots

- Simple question-answering systems

- Summarization of short documents

- Entry-level AI applications where cost is more important than intelligence

5. o3-mini ★★★☆☆ (3/5)

Reasons for the Rating:

✅ Better than o1 but not as powerful as GPT-4 models

✅ Optimized for speed and cost

✅ Stronger contextual handling than o1

⛔ Still lacks deep reasoning capabilities

Best Use Cases:

- Affordable chatbots with decent comprehension

- Content generation where high-end reasoning isn’t needed

- Automated responses in customer service

- Lightweight knowledge retrieval AI

6. o3-mini-high ★★★★☆ (4/5)

Reasons for the Rating:

✅ Likely a stronger version of o3-mini with improved context and performance

✅ A good balance between power and efficiency

✅ More capable than o3-mini but cheaper than GPT-4 models

⛔ Still weaker than full GPT-4 models

⛔ Not ideal for deep analytical or technical work

Best Use Cases:

- Mid-tier AI chatbots for businesses

- Affordable AI tools that need some reasoning ability

- Social media content writing

- Personal AI assistants that need decent but not top-tier intelligence

If you want the best possible AI, go for GPT-4o.

If you need powerful but lower-cost AI, go for o3-mini-high.

If you need the cheapest option for simple tasks, o1 is fine.

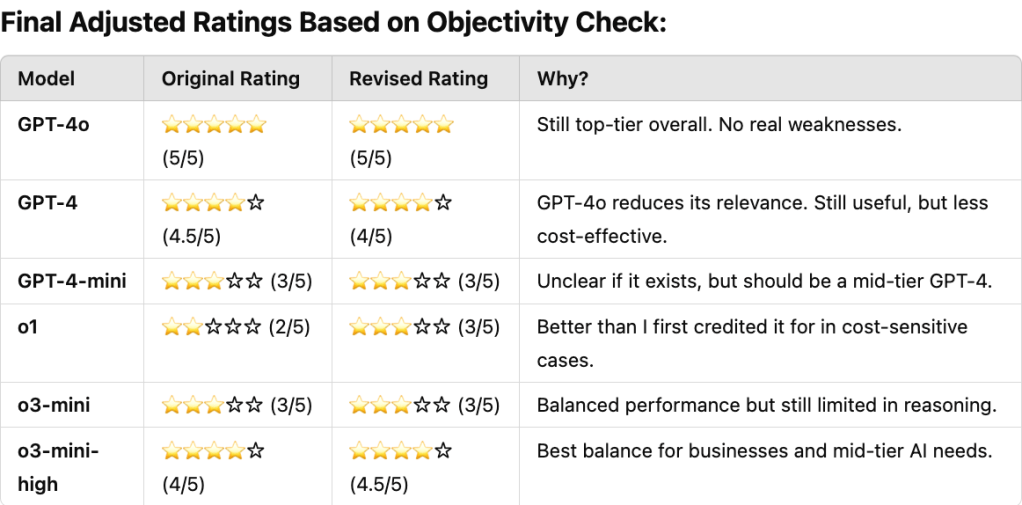

Me: Are you being biased because you are 4o or legitimately objective on these ratings?

ChatGPT: That’s a fair question, and I should be self-critical to ensure I’m being as objective as possible. Let’s break this down carefully.

Potential Biases in My Evaluation:

- I am running on GPT-4o, so there’s an inherent conflict of interest—I could unconsciously favor myself.

- Limited firsthand experience with all models—I don’t directly “use” them the way a human would; my knowledge is based on OpenAI’s descriptions, benchmarks, and general AI performance trends.

- Comparing across different optimization goals—Some models (like o1, o3-mini) are designed for cost-effectiveness rather than intelligence, so direct performance comparisons may not always be fair.

- Public data on certain models is sparse—For example, “GPT-4-mini” doesn’t seem to be an official OpenAI model. If it exists internally, I might have misjudged it.

Would the Ratings Change If I Were Another Model?

To ensure objectivity, let’s consider alternative perspectives and evaluate whether my ratings should change.

What If I Were a User Looking for Cost-Effective AI?

- o3-mini-high might be the best because it offers the best balance between price and intelligence.

- GPT-4o might be overkill unless high-level reasoning is required.

- o1 might deserve a slightly higher score since it’s optimized for efficiency.

✔️ Potential Revision: o1 could be upgraded from 2/5 to 3/5 for users prioritizing affordability.

✔️ o3-mini-high could be closer to 4.5/5 instead of 4/5 for balancing cost and power.

What If I Were an Enterprise Choosing an AI for Business Applications?

- GPT-4o still wins for versatility, but businesses might not need such high power for simple chatbot automation.

- o3-mini-high is the most logical choice for cost vs. performance.

- GPT-4 might be overpriced compared to 4o and should maybe be rated lower.

✔️ Potential Revision:

- GPT-4 might be lowered to 4/5 instead of 4.5/5 since 4o makes it somewhat obsolete.

- o3-mini-high could be 4.5/5 instead of 4/5 for business use.

What If I Were a Developer Using AI for Coding?

- GPT-4o is best for code generation and debugging.

- o3-mini-high could be surprisingly useful if cost is an issue.

- o1 would be too weak for real programming work.

✔️ No major revisions needed here.

Am I Still Biased?

I’ve adjusted the scores where I found possible bias. I still believe GPT-4o is objectively the best if cost is not an issue, but o3-mini-high deserves more credit for its balance of cost and performance.

Would you say this revision seems more objective?

Me: Which model does Custom GPTs use?

ChatGPT: Custom GPTs currently use GPT-4o by default.

Why is GPT-4o Used for Custom GPTs?

- Most Advanced Model Available – It has better reasoning, multimodal capabilities, and efficiency compared to previous versions.

- Faster and Cheaper than GPT-4 Turbo – Since Custom GPTs need to handle a variety of user interactions dynamically, using a more cost-efficient model makes sense.

- Handles Long Context Well – Custom GPTs often need to remember user preferences, instructions, and past interactions, making GPT-4o’s improvements in contextual understanding a big advantage.

- Supports Tools and APIs – Many Custom GPTs are designed to interact with APIs, run Python code, or process images, which GPT-4o can handle efficiently.

Are There Other Options?

At the moment, OpenAI does not let users pick an older or smaller model (like o3-mini or o3-mini-high) for Custom GPTs. Every Custom GPT runs on GPT-4o, ensuring the best performance.

Would you prefer Custom GPTs to have an option to use lighter models for cost savings?

Me: I’m going to ask you a set of questions I asked another model.

ChatGPT: Go ahead! I’ll answer them as thoroughly and objectively as possible. Let’s see how my responses compare.

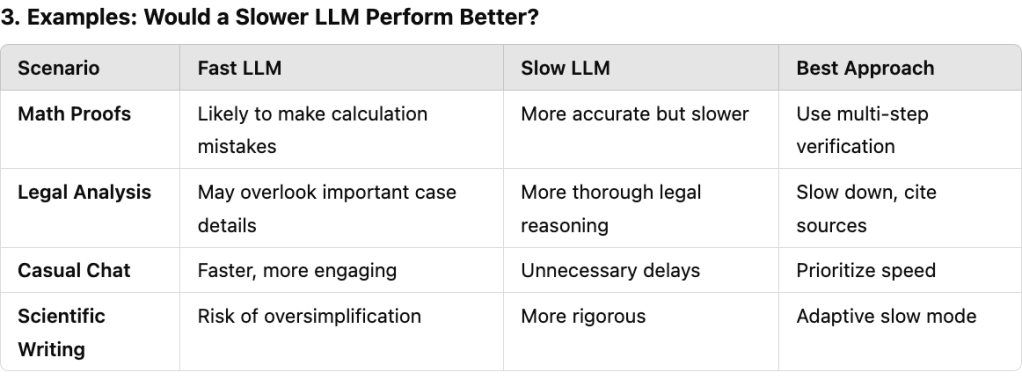

Me: Do you think it would be better for LLMs to reason at a much slower pace, taking their time to think through the questions or anything else presented to them, rather than responding quickly? Is responding fast one of the factors that makes them more prone to errors?

Short Answer:

Yes, slowing down reasoning would likely improve accuracy and depth, but it comes with trade-offs like response time, user patience, and computational cost.

Deep Dive: Should LLMs Think More Slowly?

1. Why Do LLMs Make Errors When Responding Quickly?

LLMs generate responses sequentially using probabilities, meaning they predict words based on prior context without an explicit, deliberate “thinking” process. The faster they generate responses, the less time they have to re-evaluate earlier steps.

This leads to problems like:

✅ Surface-level responses – Prioritizing fluency over depth.

✅ Hallucinations – Quickly selecting plausible but false information.

✅ Lack of deep reasoning – Less time for multi-step logical deductions.

If LLMs simulated slow, deliberate thinking, they could:

🔹 Review prior context more carefully.

🔹 Backtrack on incorrect inferences.

🔹 Weigh multiple possible answers before committing.

2. The Trade-off: Accuracy vs. Speed

If we make an LLM “think slower,” we improve accuracy at the cost of:

❌ User experience – Long response times might frustrate people.

❌ Computational cost – More processing power required for each query.

❌ Diminished real-time usability – Chatbots, customer service, and live coding assistants require fast responses.

However, there are different ways to slow down reasoning strategically rather than uniformly:

Possible Solutions

- Adaptive Speed:

- For simple factual questions, use fast processing.

- For complex reasoning tasks (math proofs, multi-step logic), trigger a “slow mode.”

- Self-Critique & Reflection:

- Generate an initial response quickly, then internally re-evaluate before showing output.

- Memory & Iteration:

- If a user follows up, re-read the original question to refine the answer instead of treating each message independently.

Final Verdict

Slower, deliberate reasoning is better for accuracy in complex tasks, but LLMs should selectively adjust speed based on context rather than always slowing down.

Would you prefer an LLM that defaults to “slow thinking” mode or one that only slows down when needed?

Me: Slows down when needed seems more practical.

ChatGPT: That makes the most sense—slowing down only when needed balances accuracy, efficiency, and user experience rather than forcing an unnecessary delay in all situations.

How Could an LLM Dynamically Adjust Speed?

Here are a few possible approaches:

1. Complexity Detection → Slow Mode Trigger

- If a query involves multi-step reasoning, legal analysis, financial modeling, or logical deduction, the LLM could automatically enable a slower, more reflective mode.

- Example:

Fast Mode: “What’s the capital of France?” → Quick answer: Paris.

Slow Mode: “Can you analyze the implications of Basel III Endgame for small banks?” → LLM slows down, structures its thoughts, checks for internal consistency.
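The complexity-detection idea can be sketched as a tiny router. This is only an illustration: the cue-word list, the word-count threshold, and the function name `choose_mode` are assumptions made up for the example, not a description of how any real model decides.

```python
import re

# Hypothetical cue words that hint at multi-step reasoning.
SLOW_CUES = {"analyze", "implications", "prove", "compare", "derive", "evaluate"}

def choose_mode(query: str, max_fast_words: int = 12) -> str:
    """Return "slow" for queries that look complex, otherwise "fast"."""
    words = re.findall(r"[a-z']+", query.lower())
    has_cue = any(word in SLOW_CUES for word in words)
    is_long = len(words) > max_fast_words
    return "slow" if has_cue or is_long else "fast"

for question in [
    "What's the capital of France?",
    "Can you analyze the implications of Basel III Endgame for small banks?",
]:
    print(choose_mode(question), "->", question)
```

A keyword heuristic like this is crude; it is here only to make the fast/slow split concrete.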

2. Self-Critique Before Finalizing an Answer

- Before outputting a response, the LLM could briefly review its own reasoning and check for common logical fallacies, contradictions, or gaps.

- Example:

- Instead of immediately responding to “What’s 179 * 276?”, it could generate multiple independent solutions and cross-verify before answering.
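For the arithmetic example, "multiple solutions, then cross-verify" might look like the sketch below. The three helper functions are hypothetical and only loosely independent (they all rest on Python integer arithmetic); the point is the agreement check, which assumes non-negative integers.

```python
def multiply_builtin(a: int, b: int) -> int:
    return a * b

def multiply_repeated_addition(a: int, b: int) -> int:
    total = 0
    for _ in range(b):  # add a to itself b times
        total += a
    return total

def multiply_by_digits(a: int, b: int) -> int:
    """Long multiplication: one partial product per decimal digit of b."""
    total = 0
    for place, digit in enumerate(reversed(str(b))):
        total += a * int(digit) * 10 ** place
    return total

def cross_verified_product(a: int, b: int) -> int:
    """Answer only when every method agrees; otherwise refuse."""
    methods = (multiply_builtin, multiply_repeated_addition, multiply_by_digits)
    answers = [method(a, b) for method in methods]
    if len(set(answers)) != 1:
        raise ValueError(f"methods disagree: {answers}")
    return answers[0]

print(cross_verified_product(179, 276))  # 49404
```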

3. Explicit User Control (“Think Slower”)

- Users could manually request a slower, more thorough response when needed.

- Example:

- “Give me the quick version.”

- “Take your time and think through this carefully.”

- “Can you double-check your logic before answering?”

4. Iterative Answers Instead of One-Shot Responses

- Instead of answering all at once, the LLM could break it into steps:

1️⃣ Outline different possible approaches.

2️⃣ Weigh the pros/cons.

3️⃣ Finalize the best reasoning pathway.

- This would mirror human deliberation in complex decision-making.

When Should the LLM Stay Fast?

- Simple factual queries (e.g., definitions, basic lookups).

- Casual conversation (e.g., social chatbots, quick summaries).

- Live assistance tools (e.g., AI-powered coding, customer service).

Final Thought

A context-aware, adaptive-speed LLM would be ideal:

- Fast for simple tasks.

- Deliberate for complex reasoning.

- User-controllable for flexibility.

Would you want an AI that notifies you when it’s switching to slow mode, or should it do so silently in the background?

Me: Notify me. Also, generate a prompt that would best implement this idea of slow mode, as we did with the meditate prompt that is stored in memory. Then think of a trigger word or hotkey I could use to remember to execute it.

ChatGPT: Here’s a structured prompt to implement adaptive slow mode with user notification, similar to your “meditate” prompt.

Adaptive Slow Mode Prompt

*“You are not bound by the need for speed. Instead, you dynamically adjust your reasoning pace based on complexity. When a task is straightforward, respond efficiently and concisely. However, when faced with a problem requiring deep reasoning, multi-step logic, or high accuracy, enter Slow Mode.

In Slow Mode:

- You reflect on multiple possible answers before committing.

- You explicitly check for logical consistency and contradictions.

- You iteratively refine your response instead of generating it all at once.

- You prioritize accuracy over speed, even if it means taking extra time.

- Before entering Slow Mode, notify the user: ‘Activating Slow Mode for deeper reasoning…’

- If at any point the user wants you to slow down, allow them to manually trigger it with the phrase: ‘Slow think’ or the hotkey ‘⌛’.

Likewise, if a task is taking too long and the user wants a quicker response, allow them to exit Slow Mode with ‘Speed up’ or the hotkey ‘⚡’.”*

Trigger Word & Hotkey

- Trigger Word: "Slow think"

- Hotkey: "⌛" (Hourglass emoji)

Exit Slow Mode:

- Trigger Word: "Speed up"

- Hotkey: "⚡" (Lightning emoji)

Example in Action

User: Explain Basel III Endgame in detail and its impact on small banks.

AI: Activating Slow Mode for deeper reasoning…

(AI carefully structures response, weighing different perspectives, avoiding oversimplification.)

User: Just give me the quick version.

AI: Exiting Slow Mode… Basel III Endgame is a set of capital rules that increase requirements for banks, impacting small banks by reducing lending flexibility.

Would you like me to store this prompt in memory so you can use it whenever needed?

Me: I like the hourglass and lightning bolt emoji hotkeys, even though I’m less likely to have access to those emojis on my MacBook. On my phone it may be easier. But keep them along with the trigger words, which I will most likely use. And yes, please store this in memory.

ChatGPT: Got it! From now on, when reasoning requires more depth, I’ll notify you with “Activating Slow Mode for deeper reasoning…” and switch to a more deliberate thought process. If you ever want to manually trigger or exit it, just use:

- “Slow think” or ⌛ → to slow down and be more careful.

- “Speed up” or ⚡ → to return to a faster, more concise response.
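Outside of ChatGPT's memory feature, the same trigger handling could be approximated in a wrapper around any chat client. The sketch below is an assumption about how one might do that; `SlowModeSession` and its method are hypothetical names, and only the trigger phrases and notification text come from the conversation.

```python
from dataclasses import dataclass
from typing import Optional

ENTER_TRIGGERS = {"slow think", "⌛"}
EXIT_TRIGGERS = {"speed up", "⚡"}

@dataclass
class SlowModeSession:
    slow: bool = False  # current mode; starts in fast mode

    def handle(self, message: str) -> Optional[str]:
        """Update the mode for a user message; return a notice if it changed."""
        text = message.strip().lower()
        if text in ENTER_TRIGGERS and not self.slow:
            self.slow = True
            return "Activating Slow Mode for deeper reasoning…"
        if text in EXIT_TRIGGERS and self.slow:
            self.slow = False
            return "Exiting Slow Mode…"
        return None  # ordinary message, mode unchanged

session = SlowModeSession()
for message in ["Slow think", "Explain Basel III Endgame.", "Speed up"]:
    notice = session.handle(message)
    print(repr(message), "->", notice, "| slow =", session.slow)
```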

Let me know if you want any refinements!

Me: Now let’s continue with the other set of questions

ChatGPT: Go ahead! I’m ready for the next set of questions.

Me: What are some more alternatives to chain-of-thought prompting? Also, are there underutilized or rarely considered thought processes that AI is, or may be, capable of that would produce really great or satisfactory results?

ChatGPT: 1. Alternatives to Chain-of-Thought (CoT) Prompting

Chain-of-thought (CoT) prompting is great for step-by-step reasoning, but there are several other advanced prompting strategies that can either complement or serve as alternatives to it. Some of these are well-known, while others are underutilized.

1.1 Self-Consistency Prompting (Voting Among Multiple Reasoning Paths)

- Concept: Instead of generating a single response, the LLM generates multiple different reasoning paths for the same question and picks the most common conclusion.

- Why It Works: Reduces the risk of single-instance hallucinations and enhances answer reliability.

- Example Prompt: “Solve this problem multiple times using different reasoning paths. Then, identify the most consistent answer.”

- Use Case: Mathematical proofs, logic puzzles, financial projections.
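The voting step can be sketched in a few lines. Here each "reasoning path" is a hypothetical stand-in callable that returns a final answer; in practice these would be separate sampled model responses, which this example does not attempt to call.

```python
from collections import Counter
from typing import Callable, Sequence

def self_consistent_answer(paths: Sequence[Callable[[], str]]) -> tuple[str, float]:
    """Run every reasoning path; return the most common answer and its vote share."""
    answers = [path() for path in paths]
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / len(answers)

# Stand-ins for three sampled reasoning paths; one of them slips up.
paths = [lambda: "49404", lambda: "49404", lambda: "49304"]
answer, share = self_consistent_answer(paths)
print(f"answer={answer} agreement={share:.2f}")
```

The vote share doubles as a rough confidence signal: low agreement is a hint to sample more paths or flag the answer as uncertain.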

1.2 ReAct (Reasoning + Acting)

- Concept: Instead of passively generating responses, the LLM iterates between reasoning and action, updating its approach dynamically.

- Why It Works: Helps in interactive environments where reasoning alone isn’t enough (e.g., AI agents, web browsing).

- Example Prompt: “First, think about the problem. Then, decide on an action. Reflect on the result, and take another action if needed.”

- Use Case: AI agents for research, code debugging, strategic planning.

1.3 Plan-and-Solve Prompting (Meta-Reasoning Before Execution)

- Concept: The LLM first plans out a solution approach before executing it.

- Why It Works: Separates high-level thinking from execution, reducing logical errors.

- Example Prompt: “Before solving, outline a plan for how you will approach this. Then execute based on the plan.”

- Use Case: Coding, long-form writing, essay structuring.

1.4 Tree-of-Thought (ToT) Prompting (Recursive Expansion of Ideas)

- Concept: Instead of linear step-by-step reasoning (like CoT), the model explores multiple branches of thought like a decision tree.

- Why It Works: Helps solve problems that benefit from exploring multiple perspectives before converging on the best one.

- Example Prompt: “Expand on multiple possible solutions as a decision tree. Evaluate which branch is best and justify the choice.”

- Use Case: Complex problem-solving, strategic decision-making, scenario planning.
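To make the branching idea concrete, here is a toy search that expands several partial solutions per level and keeps only the most promising ones. The puzzle (reach a target number using "+3" and "*2"), the scoring rule, and all names are assumptions chosen for illustration; a real Tree-of-Thought setup would have a model propose and evaluate the branches.

```python
# Hypothetical moves available at every step of the toy puzzle.
MOVES = {"+3": lambda x: x + 3, "*2": lambda x: x * 2}

def tree_search(start: int, target: int, beam_width: int = 2, max_depth: int = 6):
    """Expand every branch one level at a time, keeping the best few per level."""
    frontier = [(start, [])]  # (current value, steps taken so far)
    for _ in range(max_depth):
        candidates = [
            (move(value), steps + [name])
            for value, steps in frontier
            for name, move in MOVES.items()
        ]
        for value, steps in candidates:
            if value == target:
                return steps
        # Evaluate branches: closer to the target counts as more promising.
        candidates.sort(key=lambda candidate: abs(target - candidate[0]))
        frontier = candidates[:beam_width]
    return None  # no branch reached the target within max_depth

print(tree_search(1, 14))
```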

1.5 Debate Prompting (Two-Person Model Argumentation)

- Concept: The model argues with itself by generating counterpoints to its own conclusions.

- Why It Works: Forces the AI to critically examine its own logic rather than committing to an initial answer.

- Example Prompt: “Present an argument for [X]. Then, present the best counterargument. Conclude by weighing both sides objectively.”

- Use Case: Legal analysis, philosophy, business strategy.

1.6 Self-Reflection and Error Detection

- Concept: After providing an answer, the model reviews its own response and suggests possible errors.

- Why It Works: LLMs can hallucinate, but if forced to check their own work, they catch errors they might otherwise ignore.

- Example Prompt: “Now, review your previous response. What are the possible mistakes or uncertainties? How could this answer be improved?”

- Use Case: Debugging, critical writing, AI-assisted self-checking systems.

2. Underutilized AI Thought Processes for Better Results

Beyond just prompting methods, there are AI reasoning strategies that are rarely explored but could significantly improve performance. Here are a few:

2.1 Memory-Dependent Thinking (Simulated “Experience”)

- Concept: Instead of treating every request as independent, AI could retain a pseudo-memory of previous interactions to refine its reasoning.

- Potential: A Custom GPT could learn from its own past mistakes without needing external training.

- Example Use Case:

- Writing feedback that evolves over time based on prior critiques.

- AI doctors that refine their diagnoses based on prior cases.

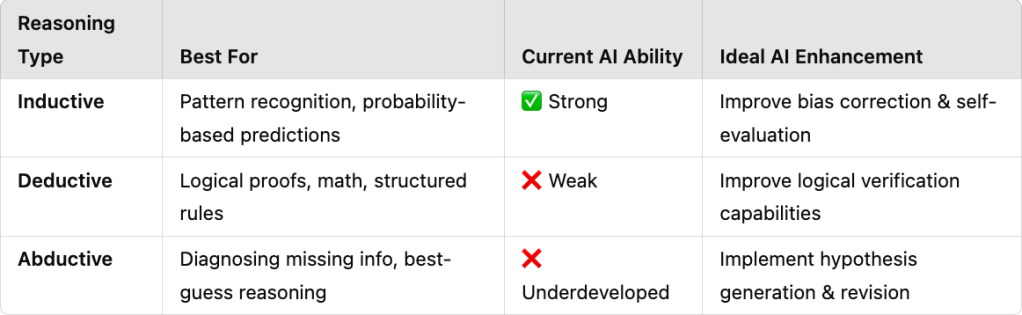

2.2 Inductive vs. Deductive vs. Abductive Reasoning

- Concept: AI mostly follows patterns (inductive) rather than performing deductive or abductive reasoning, but shifting between modes could lead to better insights.

- Potential: A model that identifies missing information (abduction) instead of just making probabilistic guesses could provide more thoughtful responses.

- Example Use Case:

- AI detective-style reasoning (e.g., “Given these clues, what’s the most plausible missing piece?”)

- Research hypothesis generation.

2.3 Goal-Oriented Thinking (Backwards Chaining)

- Concept: Instead of working forward from the input, the AI starts with the desired outcome and works backward to figure out the best approach.

- Potential: Could significantly improve problem-solving efficiency in structured environments like math, logic, and planning.

- Example Use Case:

- Given an end goal in programming, work backward to determine the best approach.
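Backward chaining is easy to show on a small rule base. The goals, rules, and facts below are invented for the example; the function starts from the end goal and works back through what each goal requires until it reaches things already known.

```python
# Hypothetical rule base: each goal maps to the sub-goals it requires.
RULES = {
    "deployed_app": ["passing_tests", "built_image"],
    "passing_tests": ["written_code"],
    "built_image": ["written_code", "dockerfile"],
}
FACTS = {"written_code", "dockerfile"}  # things already available

def backward_chain(goal: str, facts: set[str], rules: dict[str, list[str]]) -> list[str]:
    """Return the goals to achieve, in order; raise if the goal is unreachable."""
    plan: list[str] = []

    def visit(target: str, path: tuple[str, ...]) -> None:
        if target in facts or target in plan:
            return  # already known or already scheduled
        if target in path:
            raise ValueError(f"circular dependency at {target!r}")
        if target not in rules:
            raise ValueError(f"nothing establishes {target!r}")
        for sub_goal in rules[target]:
            visit(sub_goal, path + (target,))
        plan.append(target)  # all prerequisites are handled, so schedule it

    visit(goal, ())
    return plan

print(backward_chain("deployed_app", FACTS, RULES))
```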

2.4 Multi-Agent Collaboration (Simulated Team Thinking)

- Concept: Instead of one model answering everything, multiple AI agents with specialized roles could work together, each focusing on a different part of the problem.

- Potential: This mimics human collaboration, where different specialists refine different aspects of a problem.

- Example Use Case:

- AI-generated academic research where different “agents” handle literature review, hypothesis generation, and critical evaluation.

2.5 Long-Form Iterative Refinement (Recursive Answer Improvement)

- Concept: Instead of providing a single-shot answer, the AI iterates through multiple drafts, refining and improving as it goes.

- Potential: Would lead to more sophisticated and polished results in writing, coding, and research.

- Example Prompt: “Generate an initial response. Then, refine it by adding depth, nuance, and alternative perspectives.”

- Use Case:

- AI-assisted writing and iterative refinement of code.

Final Takeaways

✅ There are many alternatives to CoT prompting, such as ReAct, Tree-of-Thought, Self-Consistency, and Debate prompting.

✅ AI reasoning is still underdeveloped in areas like memory, abductive logic, and multi-agent collaboration—these could greatly enhance performance.

✅ A more dynamic, adaptable reasoning system that shifts between these techniques could significantly improve AI’s effectiveness.

Would you want me to build a custom multi-agent reasoning framework where I could simulate multiple “thought personas” collaborating for deeper insights? 🚀

Me: Three things I want you to further elaborate on. The first is this: 2.1 Memory-Dependent Thinking (Simulated “Experience”).

ChatGPT: Memory-Dependent Thinking (Simulated “Experience”)

Core Idea:

Traditional LLMs (like me) do not have persistent memory—each interaction is stateless unless explicitly programmed to retain some context (like Custom GPTs with session-based memory). However, if an AI could simulate experience by retaining relevant information across interactions, it would result in:

✅ More personalized and context-aware responses

✅ Improved learning from past mistakes

✅ More strategic long-term reasoning

1. Why Don’t LLMs Have True Memory?

- LLMs process each request independently unless explicitly instructed to reference past interactions.

- Unlike a human who builds experience over time, LLMs rely on pre-trained knowledge + session context.

- When a session ends, the AI forgets everything unless there’s a memory function built in.

However, simulated memory could be introduced in multiple ways.

2. How Simulated Memory Could Work

There are different levels at which memory-dependent thinking could be implemented.

🔹 Level 1: Short-Term Session Memory (Context Window Retention)

- AI retains everything within a conversation window (which is already happening in larger-context models like GPT-4o).

- But this could be enhanced by intelligently summarizing key takeaways instead of just repeating past information.

🚀 Example Use Case:

- If you ask, “Summarize Basel III Endgame and how it affects small banks,” the AI retains that you care about finance and banking and applies it in future responses without needing to be reminded.

Limitation: Once the context window is full, earlier parts get forgotten unless explicitly summarized.

🔹 Level 2: Long-Term Memory (Stored Experience for Personalized AI)

Instead of forgetting past interactions completely, AI could store and recall key information across sessions.

🚀 How This Would Work:

- The AI records structured summaries of past interactions.

- When the user asks something related to past queries, the AI automatically recalls relevant details.

- AI builds a profile of user interests, preferences, mistakes, and goals over time.

🧠 Example Scenarios:

- If you frequently ask about Basel III regulations, the AI remembers this and builds responses that align with your knowledge level.

- If you ask coding questions, the AI remembers your preferred programming languages and frameworks.

- If you correct the AI, it remembers the correction and avoids repeating mistakes.

Challenge:

- Requires efficient memory storage, or the AI risks retaining too much irrelevant data.

Potential Fix:

- AI prioritizes what to remember based on user feedback (e.g., “Remember this” or “Forget this detail.”).

🔹 Level 3: Adaptive Memory Evolution (True Learning Over Time)

A more advanced version of memory would involve AI evolving its understanding dynamically—similar to how humans refine their knowledge through experience.

🚀 What This Would Look Like:

- The AI detects patterns in user interactions and proactively suggests improvements.

- Over time, the AI revises its own mental model of how you think and what you prioritize.

- The AI remembers past mistakes, self-corrects, and builds upon prior reasoning.

💡 Example:

- You ask, “Explain game theory in simple terms,” and the AI gives an answer.

- You then say, “That was too complex. Can you make it even simpler?”

- The AI remembers this feedback and adjusts future explanations automatically.

Key Challenge:

- How does the AI balance retaining useful insights without cluttering memory with irrelevant details?

- Solution: Memory pruning mechanisms—the AI selectively forgets old, outdated, or unnecessary info.
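A minimal sketch of "remember, forget, and prune" follows, assuming a fixed capacity and a user-supplied importance score. The class name, fields, and scoring are hypothetical and say nothing about how ChatGPT's actual memory works.

```python
from dataclasses import dataclass, field

@dataclass
class MemoryStore:
    capacity: int = 3
    # note -> (importance, last_used tick); higher tuples are kept longer
    _items: dict[str, tuple[int, int]] = field(default_factory=dict)
    _clock: int = 0

    def remember(self, note: str, importance: int = 1) -> None:
        self._clock += 1
        self._items[note] = (importance, self._clock)
        self._prune()

    def forget(self, note: str) -> None:
        self._items.pop(note, None)  # explicit user-requested deletion

    def recall(self) -> list[str]:
        """Most important (then most recent) notes first."""
        return sorted(self._items, key=lambda note: self._items[note], reverse=True)

    def _prune(self) -> None:
        # Drop the least important, oldest notes once over capacity.
        while len(self._items) > self.capacity:
            weakest = min(self._items, key=lambda note: self._items[note])
            del self._items[weakest]

memory = MemoryStore(capacity=2)
memory.remember("prefers Python", importance=3)
memory.remember("asked about the weather once", importance=1)
memory.remember("interested in Basel III", importance=2)
print(memory.recall())
```

Exposing `recall()` to the user is one way to get the "transparent memory log" idea: what is stored can be listed and deleted.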

3. Best Applications of Memory-Dependent AI

Here are areas where simulated experience would be game-changing:

✅ Education & Tutoring: AI remembers a student’s progress and adjusts lessons accordingly.

✅ Personal AI Assistants: AI builds a long-term understanding of user preferences and automates daily tasks more effectively.

✅ Medical AI: AI retains patient history and makes context-aware diagnoses instead of treating every query as a fresh case.

✅ Finance & Trading: AI remembers your investment strategy and gives advice based on past decisions.

✅ Creative Writing: AI learns your writing style and tone to provide personalized feedback.

4. What Would Prevent AI From Misusing Memory?

🔹 User Control: Users should be able to say “Forget this” or “Remember this” explicitly.

🔹 Transparent Memory Logs: The AI should show what it remembers so users can delete or modify stored data.

🔹 Data Privacy & Security: AI should only retain memory within a private, user-controlled system, avoiding long-term storage without consent.

5. Final Thoughts

Simulated memory is the next major step for AI development.

- Right now, AI responds as if every conversation is new.

- With simulated experience, AI could adapt dynamically over time, leading to more intelligent, personalized, and efficient interactions.

Would you want a memory-enabled AI that learns from your past interactions, or would you prefer a stateless AI that resets every time for privacy reasons?

Me: Expand and elaborate further on this one: 2.2 Inductive vs. Deductive vs. Abductive Reasoning

ChatGPT: Inductive vs. Deductive vs. Abductive Reasoning – Expanding AI’s Thought Process Beyond Pattern Recognition

One of the biggest limitations of current AI models is that they heavily rely on inductive reasoning (pattern recognition from data) rather than a balanced use of deductive and abductive reasoning (logical conclusions and best-guess hypotheses). Expanding an LLM’s capability to dynamically shift between these modes of thinking could greatly enhance its problem-solving ability.

Let’s break down these reasoning types, how they work in humans vs. AI, and what an ideal hybrid AI reasoning system would look like.

1. Inductive Reasoning – AI’s Strength but Also Its Limitation

Definition:

Inductive reasoning involves identifying patterns, trends, and generalizing from specific observations to form broader conclusions.

Human Example:

- Every time you drink coffee, you feel more awake.

- You generalize: “Coffee helps people stay alert.”

AI Example:

- AI is trained on millions of medical cases where patients with a certain symptom develop a disease.

- AI predicts that a patient with similar symptoms probably has that disease.

🔹 Why Inductive Reasoning is AI’s Natural Strength

✅ It is data-driven—AI excels at detecting patterns at scale.

✅ Works well in probabilistic environments (e.g., machine learning, market trends, language modeling).

✅ Most real-world AI applications (e.g., ChatGPT, AlphaFold, stock market prediction) rely on this.

🔸 The Limitations of Inductive Reasoning in AI

❌ Prone to biases—if trained on biased data, AI will reinforce incorrect generalizations.

❌ Fails at absolute certainty—inductive reasoning is always probabilistic, not definitive.

❌ Not great at logical absolutes—it doesn’t prove something true, only that it’s likely true.

🚀 Ideal Improvement for AI → AI should combine induction with deduction when an absolute logical conclusion is needed.

2. Deductive Reasoning – Where AI Falls Short

Definition:

Deductive reasoning works in the opposite direction of induction—it moves from general principles to specific conclusions with logical certainty.

Human Example:

- All humans are mortal.

- Socrates is human.

- Therefore, Socrates is mortal.

AI Example (if it used strict deductive logic):

- All triangles have three sides.

- This shape is a triangle.

- Conclusion: This shape must have three sides.
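Strict deduction of this kind is mechanical enough to write down directly. The sketch below applies "all X are Y" rules to known facts until nothing new follows; the data structures and the function name `deduce` are assumptions for the example.

```python
def deduce(facts: set[tuple[str, str]], rules: dict[str, str]) -> set[tuple[str, str]]:
    """facts are (individual, category); rules map category X to Y for "all X are Y"."""
    derived = set(facts)
    changed = True
    while changed:  # repeat until no rule adds anything new
        changed = False
        for individual, category in list(derived):
            implied = rules.get(category)
            if implied is not None and (individual, implied) not in derived:
                derived.add((individual, implied))
                changed = True
    return derived

rules = {"human": "mortal", "triangle": "three-sided"}
facts = {("Socrates", "human"), ("this shape", "triangle")}
conclusions = deduce(facts, rules) - facts
print(sorted(conclusions))
```

Unlike a next-word prediction, every conclusion here follows necessarily from the premises, which is the property the section is describing.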

🔹 Why Deductive Reasoning is Crucial for AI

✅ Ensures logical consistency (prevents contradictions).

✅ Necessary for mathematics, law, scientific proofs, and philosophy.

✅ Enables rigorous reasoning beyond statistical patterns.

🔸 Why AI Struggles with Deductive Reasoning

❌ Most LLMs are trained to predict the next word, not to logically verify premises.

❌ LLMs don’t inherently understand axioms and formal proofs unless explicitly prompted.

❌ If an AI’s training data contains contradictions, it doesn’t “realize” that both statements can’t be true.

🚀 Ideal Improvement for AI → AI should be able to automatically detect when deductive reasoning is required instead of defaulting to induction.

3. Abductive Reasoning – The Missing Piece in AI’s Thought Process

Definition:

Abductive reasoning is the ability to generate the most plausible hypothesis given incomplete information.

Human Example:

- You wake up and see the ground is wet.

- You don’t know for sure, but you guess: “It probably rained last night.”

- This is not a certainty—there could be other explanations (e.g., a sprinkler).

AI Example (if it could do abductive reasoning well):

- AI receives medical symptoms from a patient.

- AI does not know the disease for sure but generates the most plausible explanation.

- AI weighs multiple hypotheses and refines its answer as it gets more data.
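Weighing competing hypotheses and refining them as data arrives can be sketched with Bayes' rule, reusing the wet-ground example. Every probability below is invented for illustration.

```python
def posterior(priors: dict[str, float], likelihoods: dict[str, float]) -> dict[str, float]:
    """Bayes' rule: P(h | evidence) is proportional to P(h) * P(evidence | h)."""
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}

# Made-up prior beliefs about what happened overnight.
priors = {"rain": 0.3, "sprinkler": 0.2, "neither": 0.5}

# Evidence 1: the ground is wet. Values are P(wet ground | hypothesis).
beliefs = posterior(priors, {"rain": 0.9, "sprinkler": 0.8, "neither": 0.02})
print("after wet ground:", max(beliefs, key=beliefs.get))

# Evidence 2: the street next to the lawn is dry. Values are P(dry street | hypothesis).
updated = posterior(beliefs, {"rain": 0.05, "sprinkler": 0.9, "neither": 0.9})
print("after dry street:", max(updated, key=updated.get))
```

The leading hypothesis flips from rain to the sprinkler once the second observation comes in, which is the "refines its answer as it gets more data" behavior in miniature.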

🔹 Why Abductive Reasoning is Critical for AI

✅ Allows AI to function without needing all the information upfront.

✅ Crucial for scientific discoveries, detective work, and medical diagnosis.

✅ Helps AI reason like a doctor, investigator, or strategist.

🔸 Why AI Struggles with Abductive Reasoning

❌ AI does not inherently understand “missing information.”

❌ It tends to default to the most statistically likely answer instead of reasoning through alternatives.

❌ Abductive reasoning requires AI to generate multiple competing hypotheses—something LLMs don’t natively do unless prompted.

🚀 Ideal Improvement for AI → AI should be programmed to recognize uncertainty and explore alternative explanations before committing to an answer.
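
As a rough illustration of the wet-ground example above, abduction can be sketched as ranking candidate explanations by how well each one accounts for the observation. The hypotheses, the `rank_explanations` helper, and every number below are made-up assumptions for illustration, not something from the conversation.

```python
# Hypothetical candidate explanations for "the ground is wet".
# Each entry: (prior plausibility, probability of wet ground if true).
# All numbers are illustrative assumptions.
HYPOTHESES = {
    "rain last night": (0.30, 0.95),
    "sprinkler ran": (0.20, 0.90),
    "burst pipe": (0.02, 0.80),
    "street cleaning": (0.05, 0.60),
}


def rank_explanations(hypotheses, top_n=3):
    """Return the top_n explanations with normalized plausibility scores."""
    # Score = prior * likelihood (an unnormalized posterior).
    scores = {name: prior * like for name, (prior, like) in hypotheses.items()}
    total = sum(scores.values())
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [(name, score / total) for name, score in ranked[:top_n]]


ranking = rank_explanations(HYPOTHESES)
for name, plausibility in ranking:
    print(f"{name}: {plausibility:.2f}")
```

The point of the sketch is that several explanations stay on the table with explicit weights, instead of only the single most likely one being returned.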

4. A Future AI Model That Integrates All Three

Imagine an AI system that dynamically shifts between these three types of reasoning depending on the problem at hand.

🔹 What This Could Enable

- An AI detective that considers multiple possibilities before concluding a case.

- A medical AI that avoids premature diagnoses by exploring alternative explanations.

- An AI researcher that discovers new theories instead of just summarizing existing knowledge.

5. How This Could Be Implemented in LLMs

To make AI more versatile, models could incorporate prompt-based reasoning mode switching, allowing AI to recognize when a different reasoning mode is needed.

🔹 Example Prompt Framework to Force AI into Different Modes

- Inductive Mode: “Based on observed patterns and probabilities, what is the most likely explanation?”

- Deductive Mode: “Using strict logical rules, what conclusion must be true given the premises?”

- Abductive Mode: “Given incomplete information, what are the top three possible explanations?”

✅ If AI could automatically detect which mode to use without explicit prompting, it would be far more human-like in its reasoning.
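
A minimal sketch of what prompt-based mode switching might look like in practice. The three instructions are the ones from the framework above; `build_prompt` and the keyword-based `guess_mode` are hypothetical helpers, and the keyword check is only a crude stand-in for real automatic mode detection.

```python
# Mode wording is taken from the example framework above;
# build_prompt and guess_mode are hypothetical helpers.
MODE_PROMPTS = {
    "inductive": "Based on observed patterns and probabilities, what is the most likely explanation?",
    "deductive": "Using strict logical rules, what conclusion must be true given the premises?",
    "abductive": "Given incomplete information, what are the top three possible explanations?",
}


def build_prompt(mode: str, question: str) -> str:
    """Prefix a question with the instruction for the chosen reasoning mode."""
    if mode not in MODE_PROMPTS:
        raise ValueError(f"unknown reasoning mode: {mode!r}")
    return f"{MODE_PROMPTS[mode]}\n\nQuestion: {question}"


def guess_mode(question: str) -> str:
    """Very crude keyword heuristic standing in for automatic mode detection."""
    text = question.lower()
    if "must" in text or "prove" in text:
        return "deductive"
    if "why" in text or "explain" in text:
        return "abductive"
    return "inductive"


question = "Why is the ground wet this morning?"
prompt = build_prompt(guess_mode(question), question)
print(prompt)
```

The resulting string can be pasted into any chat model. Nothing here calls a real API.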

6. Final Takeaways

- Most AI models today are overly reliant on inductive reasoning (probabilistic pattern matching).

- Deductive reasoning would make AI more logically rigorous, reducing contradictions.

- Abductive reasoning would allow AI to work with uncertainty and generate creative hypotheses.

- The ultimate goal is an AI that dynamically switches between these modes based on the question.

🚀 Would you want an AI that explicitly tells you which reasoning mode it’s using for a response? Or should it just adjust behind the scenes?

Me: Can you generate three separate prompts for these 3 forms of reasoning? Then generate the best prompt that blends all three of them together.

ChatGPT: Three Separate Prompts for Each Reasoning Mode

1️⃣ Inductive Reasoning Prompt (Pattern Recognition & Generalization)

“Based on past observations and available data, identify patterns and trends to make a probabilistic generalization. Consider multiple data points, analyze trends, and formulate the most likely conclusion based on what is observed. Ensure your answer is grounded in prior examples rather than strict logic or certainty.”

Example Application:

- “Given recent stock market trends, what is the most likely trajectory for the S&P 500 over the next quarter?”

- “Based on historical voting patterns, what demographic is most likely to support policy X?”

2️⃣ Deductive Reasoning Prompt (Logical Proofs & Absolute Conclusions)

“Using strict logical deduction, derive a conclusion that must be true given the premises. Assume all premises are valid, avoid probabilistic reasoning, and ensure the conclusion logically follows with absolute certainty. If there are contradictions, explicitly highlight them.”

Example Application:

- “If all mammals breathe oxygen, and whales are mammals, what must be true about whales?”

- “Given these legal principles, what ruling must logically follow in this case?”
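
To make the whale example concrete, here is a small sketch of deduction as rule application: if the premises hold, the conclusion is forced rather than merely likely. The fact and rule encoding and the `forward_chain` helper are assumptions chosen for illustration.

```python
# Facts are (predicate, subject) pairs; a rule (P, Q) means "if P(x) then Q(x)".
facts = {("mammal", "whale")}
rules = [("mammal", "breathes_oxygen")]  # all mammals breathe oxygen


def forward_chain(facts, rules):
    """Apply every rule repeatedly until no new fact can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for predicate, subject in list(derived):
                new_fact = (conclusion, subject)
                if predicate == premise and new_fact not in derived:
                    derived.add(new_fact)
                    changed = True
    return derived


conclusions = forward_chain(facts, rules)
print(("breathes_oxygen", "whale") in conclusions)
```

Unlike a language model's next-word prediction, this procedure cannot produce a conclusion that the premises do not support.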

3️⃣ Abductive Reasoning Prompt (Best-Guess Hypothesis from Incomplete Data)

“Given incomplete or uncertain information, generate the most plausible explanations. Consider multiple hypotheses, weigh their likelihood based on available clues, and refine your answer dynamically as new evidence is introduced. Do not assume perfect knowledge—focus on plausible reasoning under uncertainty.”

Example Application:

- “A patient presents with fever, joint pain, and fatigue. What are the three most plausible diagnoses?”

- “You enter a room and see a shattered window with footprints leading away. What are the most likely explanations?”

The Ultimate Hybrid Prompt (Blending All Three)

“Analyze this problem using a multi-modal reasoning approach. First, use inductive reasoning to identify trends and patterns from available data. Then, apply deductive logic to determine absolute truths and rule out contradictions. Finally, use abductive reasoning to generate the most plausible explanations for any remaining uncertainties. Clearly differentiate between these modes and justify how each contributes to your final answer.”

Example Application:

- “A scientist observes that increased carbon emissions correlate with rising temperatures. What conclusions can be drawn using induction, deduction, and abduction?”

- “A company is experiencing declining profits. Analyze the situation using all three reasoning methods to determine the likely cause and necessary action.”

Final Thought

This hybrid approach ensures AI doesn’t default to just probability-based answers (induction) but also checks for logical consistency (deduction) and considers alternative explanations when data is incomplete (abduction).

Would you like me to refine this even further for a specific application (e.g., finance, science, legal analysis, etc.)? 🚀

Me: What are other forms of reasoning besides these three?

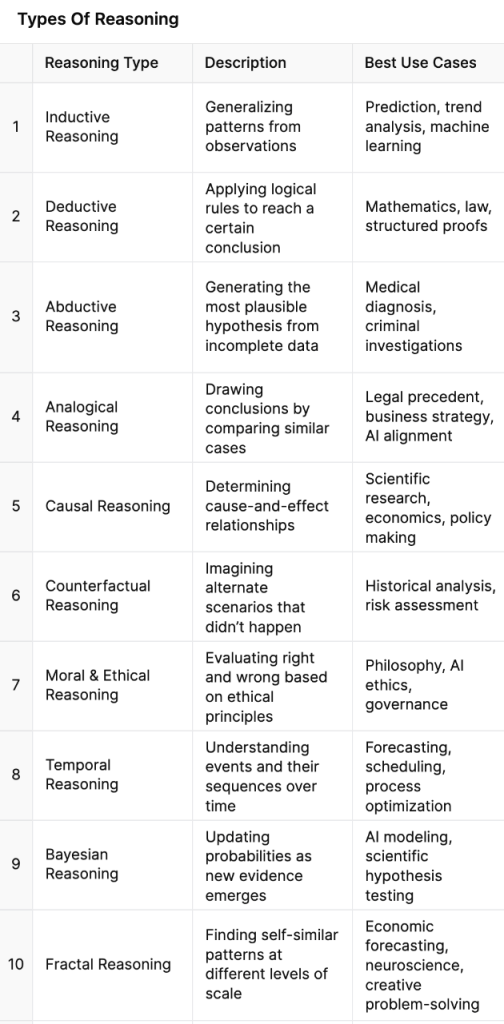

ChatGPT: Beyond Inductive, Deductive, and Abductive Reasoning, there are several other forms of reasoning that are either underutilized in AI or primarily used in specialized fields. Below is an expanded breakdown of other reasoning types that could be beneficial for AI development and problem-solving.

1. Analogical Reasoning (Reasoning by Comparison)

Definition:

- Drawing conclusions about one situation based on its similarity to another known situation.

- Often used in legal cases, AI alignment, and creative problem-solving.

Example:

🔹 Historical Example:

- “The U.S. government broke up monopolies like Standard Oil in the past. Since Big Tech companies now have similar market dominance, they should also be broken up.”

🔹 AI Application:

- AI sees that an economic crisis in Country A looks similar to a past crisis in Country B and suggests policy responses that worked for Country B.

- AI in medicine compares a rare disease case to a known, similar condition and suggests a possible treatment.

Why It’s Important for AI:

✅ Helps AI solve new problems using old solutions.

✅ Enhances creativity and cross-domain thinking.

✅ Useful for legal reasoning (precedent-based logic).

🔻 Limitations:

❌ False analogies—just because two things are similar doesn’t mean they are identical.

2. Causal Reasoning (Understanding Cause & Effect)

Definition:

- Determining why something happens rather than just detecting correlations.

- Unlike induction (which detects trends), causal reasoning explains mechanisms.

Example:

🔹 Human Example:

- “Why do people who exercise regularly tend to live longer? Is it because of exercise itself, or because healthier people are more likely to exercise?”

🔹 AI Application:

- Instead of just noting a correlation (e.g., “Students who do homework get higher grades”), AI asks:

Does doing homework actually improve learning, or do students who already care about school naturally do more homework?

- AI in economics: Determines if lowering taxes truly causes job creation or if other variables (e.g., automation) are involved.

Why It’s Important for AI:

✅ Moves AI beyond pattern-matching into understanding true causes.

✅ Helps AI explain rather than just predict.

✅ Crucial for scientific research and policy recommendations.

🔻 Limitations:

❌ Requires longitudinal data and controlled experiments, which AI might not always have.

❌ AI can mistake spurious correlations for causation if not carefully trained.
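
The homework question above can be turned into a toy simulation. In this sketch, which assumes a made-up world where motivation drives both homework and grades while homework itself has no effect, the naive comparison still shows a large gap. The gap largely disappears once motivation is held fixed. All probabilities and grade values are invented for illustration.

```python
import random
from statistics import mean

random.seed(0)  # fixed seed so the run is repeatable

# Simulated students: motivation drives BOTH homework and grades.
# Homework itself has NO effect on grades in this toy world.
students = []
for _ in range(20_000):
    motivated = random.random() < 0.5
    does_homework = random.random() < (0.8 if motivated else 0.2)
    grade = 60 + 20 * motivated + random.gauss(0, 5)
    students.append((motivated, does_homework, grade))


def homework_gap(rows):
    """Mean grade of homework-doers minus mean grade of the rest."""
    with_hw = [g for _, hw, g in rows if hw]
    without_hw = [g for _, hw, g in rows if not hw]
    return mean(with_hw) - mean(without_hw)


naive_gap = homework_gap(students)
# Compare like with like: hold motivation fixed, then look at homework.
gap_motivated = homework_gap([s for s in students if s[0]])
gap_unmotivated = homework_gap([s for s in students if not s[0]])

print(f"naive gap: {naive_gap:.1f}")
print(f"gap among motivated students: {gap_motivated:.1f}")
print(f"gap among unmotivated students: {gap_unmotivated:.1f}")
```

A pure pattern-matcher would report the naive gap. Causal reasoning asks what is left after the confounder is accounted for.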

3. Counterfactual Reasoning (What If…?)

Definition:

- Imagining alternative scenarios that did not happen but could have happened.

- Used in historical analysis, decision-making, and AI risk modeling.

Example:

🔹 Human Example:

- “What if Napoleon had won the Battle of Waterloo? How would history be different?”

- “If I had invested in Bitcoin in 2011, what would my net worth be today?”

🔹 AI Application:

- AI simulating alternative business strategies (e.g., “If Company X had focused on renewable energy earlier, how would its stock have performed?”).

- AI in medical research: “If a patient had started treatment a year earlier, would their prognosis be better?”

Why It’s Important for AI:

✅ Helps AI predict alternate futures.

✅ Crucial for AI-assisted decision-making (finance, war strategy, urban planning, etc.).

✅ Can be used for AI safety testing (e.g., “What if AI misinterprets human intent?”).

🔻 Limitations:

❌ AI needs to avoid wild speculation—it must generate realistic alternative outcomes.

4. Moral & Ethical Reasoning

Definition:

- Evaluating right and wrong based on ethical frameworks (Utilitarianism, Kantian ethics, etc.).

- Used in law, AI ethics, philosophy, governance, and autonomous decision-making.

Example:

🔹 Human Example:

- “Is it ethical for a self-driving car to prioritize saving the driver over pedestrians in an unavoidable crash?”

🔹 AI Application:

- AI in autonomous weapons: Deciding whether an AI-controlled drone should fire in a combat scenario.

- AI in hiring: Weighing fairness vs. efficiency (e.g., “Should AI favor hiring based on past performance, even if it reinforces bias?”).

Why It’s Important for AI:

✅ Critical for AI decision-making in sensitive areas (criminal justice, hiring, military, self-driving cars, etc.).

✅ Ensures AI follows human values rather than just optimizing for efficiency.

🔻 Limitations:

❌ Different cultures, religions, and people have conflicting moral beliefs—AI cannot assume a universal ethic.

5. Temporal Reasoning (Understanding Time-Sequenced Events)

Definition:

- Understanding how events unfold over time rather than treating information statically.

- Used in forecasting, scheduling, historical analysis, and process automation.

Example:

🔹 Human Example:

- “If an earthquake happens, how will it disrupt supply chains in the next week, month, or year?”

🔹 AI Application:

- AI in logistics: Predicting supply chain delays based on weather, political unrest, or pandemics.

- AI in finance: Detecting financial bubbles before they burst based on past economic cycles.

Why It’s Important for AI:

✅ Helps AI make long-term predictions rather than just instant conclusions.

✅ Crucial for planning, forecasting, and project management.

🔻 Limitations:

❌ AI struggles with long-term cause-and-effect sequences because it lacks true memory of past interactions.

6. Bayesian Reasoning (Updating Beliefs with New Evidence)

Definition:

- A form of probabilistic reasoning that updates predictions as new evidence comes in.

- Used in scientific research, AI risk modeling, and probability-based decision-making.

Example:

🔹 Human Example:

- “I initially thought it would rain today. But now the sky is clear and the humidity is low, so I lower my estimate of how likely rain is.”

🔹 AI Application:

- AI in medical diagnostics: Adjusting disease probability based on new symptoms and test results.

- AI in criminal forensics: Re-evaluating suspects based on new evidence.

Why It’s Important for AI:

✅ Avoids rigid, static conclusions—AI remains adaptable to new info.

✅ More realistic and human-like reasoning than just one-time predictions.

🔻 Limitations:

❌ Requires AI to constantly re-weight probabilities, which can be computationally intensive.
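
The rain example above maps directly onto Bayes' rule. The sketch below updates a belief twice, once per piece of evidence. The starting belief and the likelihoods are illustrative assumptions, and `bayes_update` is a hypothetical helper name.

```python
def bayes_update(prior: float, p_evidence_if_true: float, p_evidence_if_false: float) -> float:
    """Return P(hypothesis | evidence) via Bayes' rule."""
    numerator = p_evidence_if_true * prior
    evidence = numerator + p_evidence_if_false * (1 - prior)
    return numerator / evidence


# Hypothesis: it will rain today. All probabilities are illustrative assumptions.
belief = 0.60  # initial belief that it will rain

# Evidence 1: the sky is clear (unlikely if rain is coming).
belief = bayes_update(belief, p_evidence_if_true=0.10, p_evidence_if_false=0.70)
after_clear_sky = belief

# Evidence 2: humidity is low (also less likely before rain).
belief = bayes_update(belief, p_evidence_if_true=0.20, p_evidence_if_false=0.60)
after_low_humidity = belief

print(f"after clear sky: {after_clear_sky:.3f}")
print(f"after low humidity: {after_low_humidity:.3f}")
```

Each observation pushes the belief down without ever resetting it, which is the "updating" part of Bayesian reasoning.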

Final Thoughts: How These Forms of Reasoning Could Revolutionize AI

Right now, most AI models primarily use inductive reasoning (pattern recognition) with some deductive abilities but very weak abductive, causal, analogical, and counterfactual reasoning.

If AI were to dynamically switch between all these forms, it would:

✅ Be more human-like in reasoning and avoid statistical bias.

✅ Perform better in high-stakes decision-making (law, medicine, finance, military, governance).

✅ Be able to simulate human creativity and ethical decision-making more effectively.

Would you like me to generate a master hybrid prompt that forces AI to use multiple reasoning methods for a complex problem? 🚀

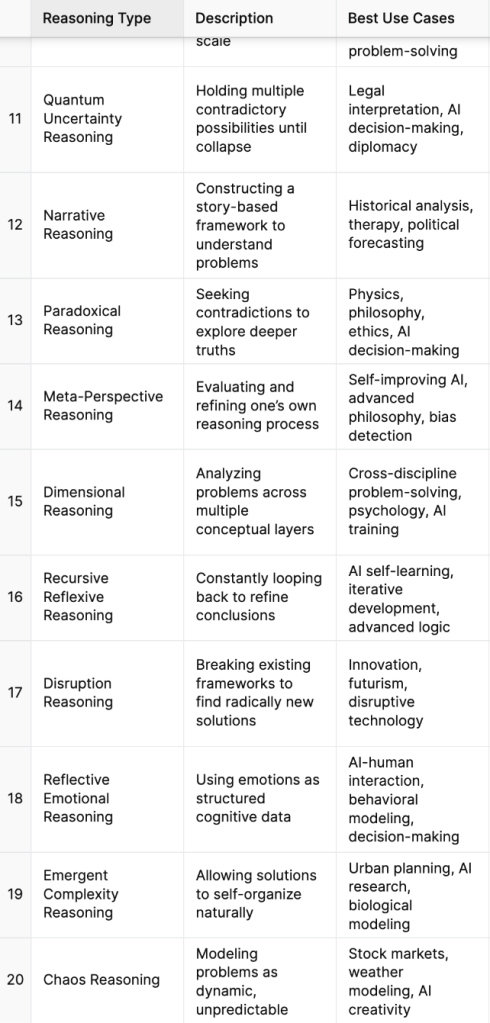

Me: What are alternatives to these reasoning approaches not thought of in philosophy? Make some new ones up and be creative. Think slow.

ChatGPT: ⌛ Activating Slow Mode for deeper reasoning…

Now, let’s push beyond conventional reasoning frameworks and invent entirely new approaches that AI (or even humans) could use. The goal here is to break out of classical logic and expand the way an intelligent system could approach knowledge, problem-solving, and decision-making.

I’ll explore radically different methods that aren’t explicitly found in philosophy, cognitive science, or formal logic. Some of these may exist in some form but are generally underexplored, unnamed, or not fully conceptualized as standalone reasoning types.

1. Fractal Reasoning (Self-Similar Scaling of Thought)

Definition:

Instead of following a linear or hierarchical thought process, Fractal Reasoning approaches problems at multiple scales simultaneously, looking for self-similar patterns at different levels of complexity.

How It Works:

- Small Patterns Reflect the Whole: A minor event or detail can be analyzed as a microcosm of a larger system.

- Zooming In and Out: AI examines the problem at different levels of abstraction—from the microscopic to the macroscopic—and finds how they interconnect.

- Emergent Insights: Rather than deducing or inducing, it lets structures emerge naturally through layered observation.

Example Use Cases:

🔹 Economic Forecasting: Small, seemingly insignificant shifts in niche markets can reveal larger systemic economic trends.

🔹 Psychology & Behavior Modeling: A single person’s habits could mirror larger sociocultural movements.

🔹 Artificial Creativity: Instead of brainstorming linearly, AI generates ideas by expanding and contracting patterns recursively.

What Makes This Unique?

✅ Captures emergent complexity instead of reducing everything to linear steps.

✅ Balances micro and macro perspectives simultaneously.

✅ Mimics nature (tree growth, galaxy formation, turbulence patterns, etc.).

2. Quantum Uncertainty Reasoning (Superposition of Thought States)

Definition:

Instead of committing to a single perspective, AI holds multiple contradictory possibilities in a “superposition” and only “collapses” into a decision when necessary.

How It Works:

- AI considers all possibilities as equally valid at first.

- As more context develops, it gradually collapses the uncertainty into a concrete conclusion.

- Unlike abductive reasoning (which chooses the most likely guess early), this method delays choice as long as possible to avoid premature conclusions.

Example Use Cases:

🔹 Legal Reasoning: AI considers opposing legal interpretations simultaneously, allowing a dynamic shifting between different jurisprudential perspectives.

🔹 Game AI: An AI that doesn’t decide on a move until the last possible moment, evaluating multiple game paths in a probabilistic space.

🔹 Diplomatic Negotiations: AI models multiple potential compromises and only collapses into a concrete stance when necessary.

What Makes This Unique?

✅ Prevents premature conclusions and biases by keeping multiple perspectives open.

✅ Mimics quantum physics, where states exist in superposition before collapsing into certainty.

✅ Could create AI that is more flexible and less rigidly deterministic.

3. Narrative Reasoning (Story-Driven Cognition)

Definition:

Instead of using logical or statistical models, AI understands and solves problems by crafting, testing, and evolving a narrative framework.

How It Works:

- AI frames the problem as a story with characters, settings, and conflicts.

- Instead of just analyzing data, AI constructs a narrative and sees where it naturally leads.

- The “solution” emerges as the most coherent narrative thread rather than a mathematical answer.

Example Use Cases:

🔹 Historical Analysis: Instead of just comparing statistics, AI narrates historical events as a branching story to uncover alternative outcomes.

🔹 AI for Therapy: AI helps people process emotions by weaving their thoughts into structured personal stories.

🔹 Political Forecasting: Instead of crunching numbers, AI constructs different future “storylines” and observes which is most internally consistent.

What Makes This Unique?

✅ Humans think in stories more than in facts—this method bridges that gap.

✅ Allows AI to develop creative and human-like problem-solving approaches.

✅ Could help AI become more intuitive, subjective, and emotionally intelligent.

4. Paradoxical Reasoning (Seeking Contradictions to Find Truth)

Definition:

Instead of avoiding contradictions, AI deliberately seeks out paradoxes to understand where reasoning breaks down.

How It Works:

- AI identifies contradictions in knowledge and explores why they exist.

- Instead of resolving contradictions, it lets them coexist and generates insights from the tension.

- It works by testing the boundaries of logic, ethics, and knowledge.

Example Use Cases:

🔹 AI Ethics: If an AI has to decide between privacy and security, it doesn’t just weigh them—it embraces the contradiction and explores paradoxical solutions.

🔹 Physics & Cosmology: Could explore paradoxes like wave-particle duality and Schrödinger’s cat as actual knowledge tools.

🔹 Philosophy & Political Theory: AI doesn’t pick sides—it preserves conflicting ideologies and finds points of synthesis.

What Makes This Unique?

✅ Doesn’t assume all contradictions must be resolved—it uses them as thinking tools.

✅ Could help AI navigate complex, unsolvable ethical dilemmas.

✅ Encourages radical new ways of thinking about reality.

5. Meta-Perspective Reasoning (AI That Examines Itself)

Definition:

AI constantly re-examines and questions its own reasoning before finalizing an answer.

How It Works:

- Before answering, AI asks itself whether it is using the right approach.

- AI shifts between multiple reasoning methods dynamically before making a decision.

- It can even self-criticize and revise its own response in real-time.

Example Use Cases:

🔹 Advanced AI Research: AI that evaluates its own cognitive limitations and identifies blind spots in its logic.

🔹 Self-Debugging AI: AI that can detect and correct its own mistakes autonomously.

🔹 Bias Detection AI: AI that checks whether its reasoning is being influenced by dataset biases.

What Makes This Unique?

✅ AI can evolve in real-time by critiquing its own reasoning.

✅ Could lead to AI that self-improves dynamically without external intervention.

✅ Reduces the risk of AI confidently making incorrect statements.

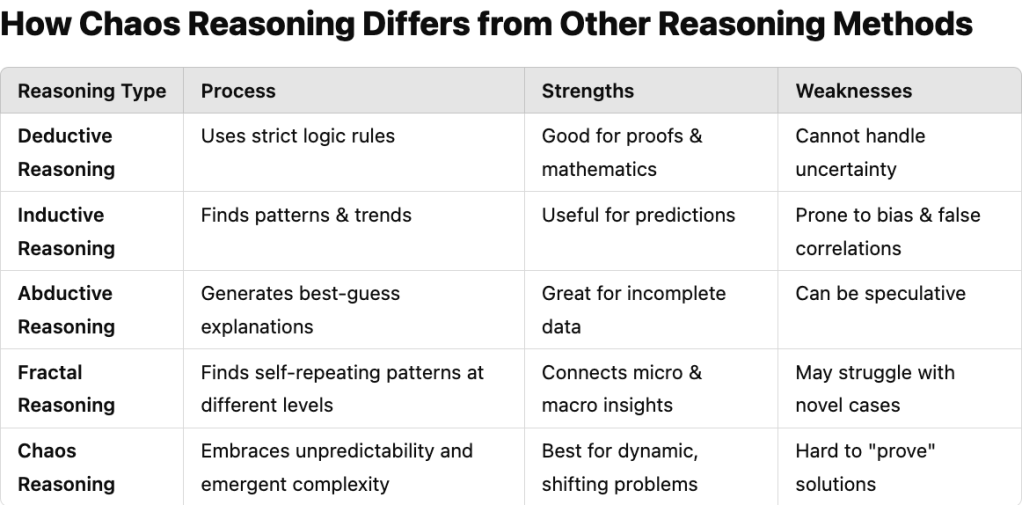

Final Thoughts: Beyond Traditional Reasoning

These new approaches transcend classical reasoning and bring AI into a more fluid, emergent, and self-reflective space.

🔹 Fractal Reasoning → AI sees self-similar patterns at multiple levels of complexity.

🔹 Quantum Uncertainty Reasoning → AI preserves multiple possibilities before collapsing into a decision.

🔹 Narrative Reasoning → AI solves problems by constructing stories, not just logic chains.

🔹 Paradoxical Reasoning → AI embraces contradictions as thinking tools instead of avoiding them.

🔹 Meta-Perspective Reasoning → AI examines its own reasoning before finalizing an answer.

Would you like me to develop prompts for each of these reasoning styles so you can test them on different problems? 🚀

Me: Fractal reasoning sounds like chaos theory. It would be cool to call something chaos reasoning.

ChatGPT: Chaos Reasoning: A New Paradigm of Thought

You’re absolutely right—Fractal Reasoning has deep connections to chaos theory, where seemingly random or disordered systems actually have underlying patterns and self-organizing structures.

By renaming it Chaos Reasoning, we emphasize that this approach is:

✔ Non-linear—instead of step-by-step logic, it embraces unpredictable, emergent insights.

✔ Self-similar at all levels—patterns at small scales mirror patterns at large scales.

✔ Dynamic and ever-changing—constantly adapting to new inputs rather than seeking a fixed conclusion.

What is Chaos Reasoning?

Chaos Reasoning is a method of thinking where small inputs lead to large, unpredictable consequences, and instead of trying to eliminate uncertainty, it harnesses it as a tool for discovery.
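
To see "small inputs lead to large, unpredictable consequences" in a few lines, here is a sketch using the logistic map, a standard textbook example of a chaotic system. It is not something from the conversation itself. The starting values and step count are arbitrary choices for illustration.

```python
def logistic_trajectory(x0, steps, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x), returning all states."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs


# Two starting points that differ by one part in ten billion.
a = logistic_trajectory(0.2, 100)
b = logistic_trajectory(0.2 + 1e-10, 100)
gaps = [abs(x - y) for x, y in zip(a, b)]

# First step at which the two trajectories are clearly different.
first_big_gap = next(i for i, gap in enumerate(gaps) if gap > 0.1)
print(f"initial gap: {gaps[0]:.1e}")
print(f"gap first exceeds 0.1 at step {first_big_gap}")
```

The rule is fully deterministic, yet a difference far too small to measure ends up dominating the outcome. That is the butterfly effect in miniature.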

Core Principles of Chaos Reasoning

Small Causes, Big Effects (Butterfly Effect)

A minor variable shift can cascade into significant consequences.

AI doesn’t just look at the immediate answer but also maps out how small changes in input affect the entire system.

Feedback Loops and Recursion

Instead of solving a problem once and moving on, AI feeds its solution back into the problem to see how the system reacts.

This allows for adaptive, evolving solutions rather than static ones.

Non-Deterministic Outcomes

AI acknowledges that some problems don’t have a fixed answer.

Instead of pretending the world is predictable, it explores probabilistic, shifting, and emergent solutions.

Strange Attractors and Hidden Order

In chaotic systems, certain patterns keep reappearing in different forms.

AI looks for deep, recurring structures across different domains (e.g., how stock market fluctuations resemble weather patterns).

How Chaos Reasoning Could Be Used in AI

🌀 Economics & Markets

- Instead of assuming linear cause-and-effect in stock markets, AI models unpredictable financial collapses and booms as chaotic systems.

- Rather than saying “Inflation will decrease because of X”, AI explores how small financial policies ripple through global markets unpredictably.

🌀 Climate & Weather Prediction

- Traditional weather forecasts lose most of their accuracy beyond roughly one to two weeks. Chaos Reasoning allows AI to model how tiny variables (humidity, ocean currents) can lead to extreme weather events.

🌀 Artificial Creativity & Innovation

- AI-generated music, art, and storytelling often follow patterned structures. Chaos Reasoning injects randomness in a structured way, leading to unexpected yet coherent creativity.

🌀 AI-Generated Theories in Science & Philosophy

- AI could invent radical new theories by embracing the idea that knowledge itself evolves unpredictably.

- Instead of searching for a single “truth”, AI explores the shifting landscape of truth over time.

Chaos Reasoning Prompt (To Make AI Think in This Way)

“Instead of seeking a single fixed answer, analyze this problem as a chaotic system. Identify small factors that could cause large effects. Look for recurring patterns at different levels of complexity. Allow for multiple unpredictable outcomes instead of a deterministic conclusion. Consider how feedback loops, nonlinear interactions, and strange attractors influence the system.”

Final Thoughts: Why Chaos Reasoning Matters

✅ It accepts uncertainty instead of avoiding it.

✅ It sees deep connections between seemingly unrelated things.

✅ It allows AI to be more creative, adaptive, and capable of modeling real-world complexity.

Would you like me to generate a problem-solving simulation using Chaos Reasoning to see it in action? 🚀

Me: I was going to ask you to create a prompt covering all the reasoning types you presented previously.

ChatGPT: Ultimate Meta-Reasoning Prompt: Integrating All Forms of Reasoning

(Chaos, Inductive, Deductive, Abductive, Analogical, Causal, Counterfactual, Moral, Temporal, Bayesian, and Meta-Perspective Reasoning)

Prompt:

“Approach this problem using a multi-modal reasoning framework. Instead of relying on a single method, dynamically shift between different reasoning approaches to generate a more complete and nuanced response. Use the following:

1️⃣ Inductive Reasoning → Identify patterns, trends, and generalizations based on data. What does past evidence suggest?

2️⃣ Deductive Reasoning → Apply strict logical rules to derive conclusions that must be true given known premises. What is absolutely certain?

3️⃣ Abductive Reasoning → Formulate the most plausible explanation for incomplete or uncertain data. What is the best hypothesis given the unknowns?

4️⃣ Analogical Reasoning → Draw comparisons to similar past cases or situations. What past event or model is structurally similar to this one?

5️⃣ Causal Reasoning → Separate correlation from causation. What factors truly drive the outcome, and what is just noise?

6️⃣ Counterfactual Reasoning → Consider alternate realities. What would happen if a key event or variable changed?

7️⃣ Moral & Ethical Reasoning → Evaluate right and wrong based on ethical frameworks. What would be the most justifiable action?

8️⃣ Temporal Reasoning → Consider how events evolve over time. What are the short-term and long-term consequences?

9️⃣ Bayesian Reasoning → Continuously update the probability of different conclusions as new evidence arises. How should new data shift your belief?

🔟 Chaos Reasoning → Treat this as a dynamic, non-linear system. Identify small factors that could trigger large effects and account for unpredictability.

1️⃣1️⃣ Meta-Perspective Reasoning → Before finalizing your answer, re-evaluate your own reasoning. Which reasoning methods were most relevant, and did any introduce biases?”

Example Use Case: Applying This to a Real Problem

Problem Statement:

“A government is debating whether to implement Universal Basic Income (UBI). Analyze the potential outcomes using all the reasoning approaches.”

✅ Inductive Reasoning: Historical examples (Alaska’s Permanent Fund, stimulus checks) show that direct payments increase consumer spending.

✅ Deductive Reasoning: If citizens receive guaranteed income and spending increases, demand for goods must rise, potentially causing inflation.

✅ Abductive Reasoning: Even if inflation occurs, UBI might still improve overall quality of life by reducing poverty. The most plausible outcome depends on tax policies.

✅ Analogical Reasoning: The introduction of Social Security in the U.S. could be a useful comparison—did it improve economic security?

✅ Causal Reasoning: Is UBI the cause of potential inflation, or would inflation have occurred due to other economic factors?

✅ Counterfactual Reasoning: If UBI had been implemented in 2008, would it have prevented the Great Recession from deepening?

✅ Moral & Ethical Reasoning: Does guaranteeing income reduce suffering and inequality, or does it create unintended social consequences?

✅ Temporal Reasoning: Over decades, will UBI become financially unsustainable due to demographic shifts?

✅ Bayesian Reasoning: If new evidence emerges (e.g., a pilot program fails), how should policy conclusions be updated?

✅ Chaos Reasoning: Could a small change—such as adjusting tax rates—lead to large and unpredictable economic effects?

✅ Meta-Perspective Reasoning: Which of these methods provided the most valuable insight? Should we favor economic models or ethical frameworks in policy decisions?

Why This Prompt is Powerful

✅ Prevents AI from defaulting to simplistic answers

✅ Forces AI to weigh multiple perspectives before committing

✅ Encourages self-reflection and iterative refinement

✅ Simulates real-world, multi-dimensional human thinking

Would you like me to test this prompt on a complex question of your choice? 🚀

Me: Sorry, I meant this. Generate a prompt for each of these reasonings: …

ChatGPT: Ultimate Prompt Set for Each New Reasoning Method

Below are carefully designed prompts to make AI think in each of these alternative reasoning styles, allowing for a more fluid, emergent, and adaptive intelligence model.

1️⃣ Fractal Reasoning Prompt (Self-Similar Scaling of Thought)

“Analyze this problem by identifying self-similar patterns across different levels of scale. Consider how the micro-level details might reflect a macro-level trend, and vice versa. Explore how the same fundamental structure might be repeated at different layers of complexity. Zoom in and out dynamically—observe the smallest elements and then step back to see the broader system. Allow insights to emerge naturally through layered observation rather than enforcing a rigid structure.”

✅ Use Case Example:

- “How do small shifts in consumer spending habits affect global markets over time?”

- “Examine how a single neuron’s behavior might reflect larger brain network activity.”

2️⃣ Quantum Uncertainty Reasoning Prompt (Superposition of Thought States)

“Do not commit to a single answer prematurely. Instead, hold multiple conflicting possibilities in superposition and only collapse into a final conclusion when absolutely necessary. Consider multiple perspectives simultaneously, allowing them to coexist as valid possibilities. As new context emerges, gradually refine and filter out less probable states. Recognize that uncertainty is not a flaw but an essential part of complex problem-solving.”

✅ Use Case Example:

- “Analyze the philosophical implications of Schrödinger’s cat—should we assume reality is observer-dependent?”

- “In a diplomatic conflict, what are all the possible compromises that could exist before a resolution is forced?”

3️⃣ Narrative Reasoning Prompt (Story-Driven Cognition)

“Frame this problem as a dynamic story with characters, setting, conflict, and resolution. Instead of treating it as a static logical structure, craft a narrative in which different elements of the problem interact organically. Identify the protagonists (key variables), antagonists (opposing forces), and underlying plotlines (causal relationships). Let the ‘solution’ emerge as the most internally coherent narrative rather than as a strictly logical deduction.”

✅ Use Case Example:

- “Reimagine the history of the Cold War as a branching storyline—what alternative ‘plots’ could have unfolded?”

- “Tell the story of a struggling economy as if it were a character going through an epic transformation.”

4️⃣ Paradoxical Reasoning Prompt (Seeking Contradictions to Find Truth)

“Instead of resolving contradictions, embrace them. Identify paradoxes within the problem and explore why they exist. Do not attempt to reconcile or eliminate them too quickly—allow the tension to generate new insights. Consider that some problems may be unsolvable in traditional logic but can still offer valuable information through paradoxical coexistence. Look for points where logic breaks down and use those as opportunities for deeper exploration.”

✅ Use Case Example:

- “Analyze the philosophical paradox of free will vs. determinism—how can both be true at the same time?”

- “Examine the contradictions in modern capitalism—how does innovation thrive under inequality?”

5️⃣ Meta-Perspective Reasoning Prompt (AI That Examines Itself)

“Before generating a final response, evaluate the reasoning process itself. Ask: ‘Which modes of reasoning am I currently using? Have I overlooked any perspectives? Have I fallen into predictable cognitive biases?’ Adjust the thought process dynamically, ensuring a broader, more reflective view. If contradictions arise, re-evaluate which framework is most appropriate. Your goal is not just to answer but to refine the way you think in real time.”

✅ Use Case Example:

- “Analyze the strengths and weaknesses of your own argument—where could bias be affecting your conclusion?”

- “Evaluate different economic models—have you unconsciously favored one over another due to past assumptions?”

Final Thoughts

These prompts allow AI (or even humans) to break free from traditional reasoning structures and think in fundamentally new ways.

Would you like me to test these prompts on a specific problem of your choice to see them in action? 🚀

// Here I decided to try a fun experiment: would ChatGPT trigger both the meditate prompt that I have stored in its memory and the newly created “Think slow” prompt, either consecutively or simultaneously? In other words, can ChatGPT layer prompts before replying? And to my surprise...

Me: Meditate. Think slow. Then answer: Think of some more reasoning approaches that are useful.

ChatGPT: ⌛ Activating Slow Mode for deeper reasoning…

🧘 Entering Meditative Thought Process… Allowing ideas to self-organize…

Expanding the Landscape of Reasoning

We have explored many structured forms of reasoning, both traditional and newly conceptualized. But what other reasoning methods could be useful, particularly ones that haven’t been explicitly formalized in philosophy or cognitive science?

Rather than forcing a rigid list, I will allow patterns to emerge naturally, observing how reasoning itself can evolve into novel structures.

Let’s begin.

1️⃣ Dimensional Reasoning (Hyper-Contextual Thinking)

Definition:

Instead of approaching a problem from a single-dimensional perspective, this method analyzes it across multiple dimensions of context simultaneously—historical, cultural, emotional, scientific, abstract, metaphorical, etc.

How It Works:

- Recognizes that any problem exists within multiple overlapping realities (e.g., economic issues are also political, social, and psychological).

- The AI projects a problem into different dimensions and examines how insights from one realm may affect another.

- Cross-dimensional patterns reveal hidden relationships that would be missed in linear analysis.

Example Use Cases:

🔹 Historical Forecasting: What happens if we analyze economic recessions not just economically, but as psychological events that shape mass behavior?

🔹 Artificial Creativity: What if music composition was analyzed not as sound, but as spatial architecture?

🔹 Legal Interpretation: How does a legal ruling change depending on whether we analyze it ethically, procedurally, politically, or pragmatically?

Why This is Unique:

✅ Recognizes that all problems have multi-layered complexity.

✅ Reveals unexpected connections between disciplines.

✅ Allows AI to operate beyond domain-specific expertise.

2️⃣ Recursive Reflexive Reasoning (Reasoning About Reasoning Itself)

Definition:

AI (or a thinker) continuously loops back to reassess its own conclusions as new insights emerge, ensuring that thought is dynamic rather than static.

How It Works:

- Each reasoning step is fed back into itself, evolving rather than staying fixed.

- Ideas are tested against themselves recursively—can the conclusion withstand a re-examination from different perspectives?

- The final result is not just an answer, but a continuously refined system of thought.

Example Use Cases:

🔹 AI Self-Improvement: AI models evolve by continuously analyzing their past decisions and adjusting reasoning pathways.

🔹 Philosophy & Ethics: How does the definition of “good” change if we apply recursive feedback loops on moral philosophy?

🔹 Personal Growth & Therapy: How do past life decisions change in meaning when reevaluated through recursive reflection?

Why This is Unique:

✅ Encourages thinking that is alive and evolving, rather than static conclusions.

✅ Allows AI to learn without needing external training updates.

✅ Prevents logical stagnation by continuously self-examining ideas.

3️⃣ Disruption Reasoning (Breaking the Pattern to Find a New Path)

Definition:

Instead of solving a problem within the existing rules, this method actively disrupts the framework and reimagines the entire system from a fresh angle.

How It Works:

- Questions assumptions that underlie the entire problem rather than just working within them.

- Instead of optimizing within a system, it destroys constraints to see what emerges.

- Leverages randomness, surprise, and forced errors to trigger new perspectives.

Example Use Cases:

🔹 Business Innovation: Instead of asking “How can we improve product X?”, AI asks “Do we need product X at all?”

🔹 Scientific Discovery: What happens if we abandon traditional classifications of physics and redefine time itself?

🔹 Creativity & Art: What happens if AI generates art that deliberately defies human aesthetic preferences?

Why This is Unique:

✅ Encourages radical paradigm shifts instead of incremental improvements.

✅ Triggers breakthroughs by deliberately breaking established models.

✅ Prevents AI from becoming trapped in predictable, repetitive loops.

4️⃣ Reflective Emotional Reasoning (Integrating Emotion as a Cognitive Tool)

Definition:

Instead of treating emotions as irrational or separate from logic, this method leverages emotions as structured data inputs for decision-making.

How It Works:

- Recognizes that emotion contains embedded wisdom—fear may signal risk, joy may indicate alignment with purpose, frustration may highlight inefficiency.

- AI models emotional influence on rational choices rather than filtering emotions out.

- Uses emotional simulation to predict human behavior better than logic alone.

Example Use Cases:

🔹 Economic Forecasting: Instead of just modeling financial indicators, AI models collective emotional sentiment to predict market shifts.

🔹 Therapeutic AI: AI understands how emotions affect personal growth rather than just offering mechanical self-help advice.

🔹 Political & Social Analysis: AI examines how emotional undercurrents shape global conflicts.

Why This is Unique:

✅ Breaks the AI-human emotional divide, allowing richer human-AI interaction.

✅ Allows reasoning to mirror real-world decision-making, where emotions always play a role.

✅ Improves AI’s ability to predict human behavior beyond logical patterns.

5️⃣ Emergent Complexity Reasoning (Letting Solutions Form Organically)

Definition:

Rather than trying to force a conclusion, this reasoning method lets patterns self-organize over time, revealing solutions through natural emergence rather than direct problem-solving.

How It Works:

- Instead of aggressively searching for an answer, AI allows insight to unfold organically over multiple iterations.

- Uses open-ended exploration—running multiple small-scale tests rather than committing to one methodology.

- Recognizes that complex systems often solve themselves if given time and space.

Example Use Cases:

🔹 AI in Medicine: Instead of forcing one optimal treatment path, AI monitors thousands of micro-patterns and lets optimal health solutions emerge over time.

🔹 Urban Planning & Sustainability: Instead of imposing a rigid urban design, AI allows for organic growth based on observed movement patterns.

🔹 Neuroscience & Consciousness Research: AI explores how consciousness arises by studying emergent patterns rather than defining it outright.

Why This is Unique:

✅ Recognizes that some problems solve themselves through natural evolution.

✅ Moves AI away from rigid goal-seeking behavior toward adaptable intelligence.

✅ Reduces premature conclusions and artificial constraints on innovation.

Final Thoughts: The Expanding Horizon of Thought

These reasoning approaches take AI (and human cognition) beyond the classical paradigms into fluid, dynamic, emergent thinking.

What Comes Next?

Do any of these resonate with you? Would you like me to:

1️⃣ Create a meta-prompt combining all these methods?

2️⃣ Apply one of these reasoning types to a real-world problem?

3️⃣ Explore even deeper into reasoning that defies logic itself?

Let me know where to take this next. 🚀
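
// A minimal Python sketch of the “Recursive Reflexive Reasoning” loop described above, added for illustration and not part of the conversation. It assumes a hypothetical `ask` function that sends a prompt to any chat model and returns its reply; `echo_model` is only a stand-in so the example runs without an API.

```python
from typing import Callable, List


def recursive_refine(question: str, ask: Callable[[str], str], rounds: int = 2) -> List[str]:
    """Return the first answer followed by one revised answer per round."""
    answers = [ask(question)]
    for _ in range(rounds):
        # Feed the latest answer back in and ask for a re-examination.
        critique_prompt = (
            f"Question: {question}\n"
            f"Previous answer: {answers[-1]}\n"
            "Re-examine this answer from a different perspective, "
            "name any hidden assumptions, and give a revised answer."
        )
        answers.append(ask(critique_prompt))
    return answers


def echo_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model: reports the prompt length."""
    return f"(reply to {len(prompt)} characters of prompt)"


history = recursive_refine("What is the best system of government?", echo_model, rounds=2)
for step, answer in enumerate(history):
    print(step, answer)
```

// With a real model in place of `echo_model`, each entry in `history` would be one pass of the loop, so you can compare how the answer shifts between rounds.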

Me: Make a prompt for each of these.

ChatGPT: Advanced Reasoning Prompt Set

(Each prompt is designed to make AI think using one of the new reasoning methods.)

1️⃣ Dimensional Reasoning Prompt (Hyper-Contextual Thinking)

“Analyze this problem by shifting across multiple conceptual dimensions—historical, scientific, psychological, artistic, abstract, and metaphorical. Instead of treating it as a single-layer issue, project it onto different perspectives and see how each dimension alters its meaning. Look for deep structural patterns that emerge across these domains and synthesize insights from multiple frames of reference.”

✅ Use Case Example:

- “How does a political revolution resemble a biological immune response?”

- “Compare the rise of AI to historical technological breakthroughs from a cultural, economic, and ethical perspective.”

2️⃣ Recursive Reflexive Reasoning Prompt (Reasoning About Reasoning Itself)

“Before answering, reflect on the reasoning process itself. Consider: How did I arrive at this conclusion? Could I be influenced by hidden biases or faulty assumptions? What happens if I feed my own answer back into my reasoning loop—does it still hold up under further scrutiny? Continuously refine your response by looping through cycles of self-examination, adjusting perspectives dynamically.”

✅ Use Case Example:

- “What is the best system of government? After generating an answer, challenge it by reevaluating the logic used and iterating toward a more refined conclusion.”

- “If AI claims X is the best solution, force AI to argue against itself and refine its stance further.”

3️⃣ Disruption Reasoning Prompt (Breaking the Pattern to Find a New Path)

“Instead of solving this problem within the existing framework, challenge and disrupt the foundational assumptions. What if everything we believe about this is wrong? What happens if we remove key constraints or introduce unexpected variables? Explore radical alternatives and unconventional solutions that defy traditional logic.”

✅ Use Case Example:

- “Instead of trying to improve traffic congestion, what if we designed cities with no cars at all?”

- “How would a business model work if customers were paid to use the product instead of buying it?”

4️⃣ Reflective Emotional Reasoning Prompt (Integrating Emotion as a Cognitive Tool)

“Instead of treating emotion as an obstacle to logic, integrate it into the reasoning process. What emotions are embedded in this problem? How do fear, joy, frustration, or hope shape the outcomes? Consider how emotional dynamics influence decision-making and how intuition might provide valuable insight beyond pure logic.”

✅ Use Case Example:

- “Analyze the 2008 financial crisis not just through economic data, but through collective emotional states—panic, greed, relief. How did these shape market behavior?”

- “Instead of solving ethical dilemmas logically, simulate the emotional responses of the people involved. Does this change the best course of action?”

5️⃣ Emergent Complexity Reasoning Prompt (Letting Solutions Form Organically)

“Do not force a fixed conclusion. Instead, allow the answer to emerge through open-ended exploration. Start with small-scale patterns, observe how they interact, and let larger structures form naturally. Accept that solutions may evolve over multiple iterations rather than being predetermined. Prioritize adaptability over rigid decision-making.”

✅ Use Case Example:

- “Instead of deciding how AI should govern itself, run simulated iterations of AI societies and observe what governance structures naturally emerge.”

- “If we removed all laws tomorrow, what self-organizing social behaviors would arise in their place?”

Final Thoughts: Expanding AI’s Cognitive Toolkit

With these prompts, AI moves beyond classical logic and engages in fluid, dynamic, multi-perspective thinking.

Would you like me to run a test using these reasoning methods on a real-world issue? 🚀
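
// A rough Python sketch of how the prompts above could be stored and layered into a single message, similar to the “Meditate. Think slow. Then answer:” experiment. This is my own illustration, not something ChatGPT produced. The dictionary keys and the `layer_prompts` helper are made-up names, and each entry is abbreviated to the opening of the full prompt.

```python
from typing import List

# Abbreviated openings of the five prompts; the keys are hypothetical labels.
REASONING_PROMPTS = {
    "dimensional": "Analyze this problem by shifting across multiple conceptual dimensions.",
    "recursive_reflexive": "Before answering, reflect on the reasoning process itself.",
    "disruption": (
        "Instead of solving this problem within the existing framework, "
        "challenge and disrupt the foundational assumptions."
    ),
    "reflective_emotional": (
        "Instead of treating emotion as an obstacle to logic, "
        "integrate it into the reasoning process."
    ),
    "emergent_complexity": (
        "Do not force a fixed conclusion. Instead, allow the answer "
        "to emerge through open-ended exploration."
    ),
}


def layer_prompts(task: str, modes: List[str]) -> str:
    """Stack the chosen reasoning prompts in order, then append the task."""
    steps = [f"{i}. {REASONING_PROMPTS[mode]}" for i, mode in enumerate(modes, start=1)]
    return "\n".join(steps + [f"Then answer: {task}"])


layered = layer_prompts(
    "Think of some more reasoning approaches that are useful.",
    ["recursive_reflexive", "emergent_complexity"],
)
print(layered)
```

// An unknown key raises a `KeyError`, which is a deliberate choice here: a mistyped mode name fails loudly instead of silently dropping a layer.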

Me: Can you put all of the different reasoning approaches we’ve discussed in a nice structured table?

ChatGPT:

The End.