Me: Please provide your feedback and thoughts on this post. Postulate neglected or overlooked camps or ideas not mentioned.

ChatGPT: This post outlines three prevailing views on the emergence of Artificial General Intelligence (AGI) from Large Language Models (LLMs), but there are additional perspectives worth considering. Let’s explore these in depth.

Breakdown of the Three Camps in the Post

- AGI will not emerge from LLMs

  - This camp assumes that the current architecture of LLMs is fundamentally incapable of producing AGI, either due to computational limitations or fundamental design flaws.

  - Critics in this camp often argue that LLMs are glorified statistical models, relying on predictive token generation rather than genuine understanding, reasoning, or goal-setting.

  - They may argue that AGI requires something fundamentally different, such as:

    - Embodied cognition (interaction with the physical world)

    - Active learning (not just training on static data)

    - Intrinsic motivation or agency

- AGI will emerge when LLMs reach a critical mass of scale

  - This is the “scaling hypothesis,” which assumes that at a sufficient scale (e.g., 1000 trillion parameters), intelligence will spontaneously emerge.

  - This camp is inspired by empirical findings that scaling LLMs leads to emergent capabilities (e.g., in-context learning, reasoning improvements).

  - Critics argue this is an assumption without solid theoretical backing: brute-force scaling may hit diminishing returns, leading to increasingly sophisticated “simulated” intelligence but never true AGI.

- AGI will never emerge because consciousness is non-algorithmic

  - This argument is rooted in philosophical and cognitive science perspectives.

  - The core belief here is that consciousness or intelligence is irreducible to algorithms or purely physical processes.

  - It suggests AGI requires something beyond computational models, such as quantum processes (the Penrose-Hameroff hypothesis) or non-material consciousness.

  - Some versions of this view rest on strong (substance) dualism, which holds that mind and matter are fundamentally separate; others, like Penrose’s, remain physicalist but argue that the relevant physics is non-computable.

Neglected or Overlooked Perspectives

There are additional plausible views that aren’t explicitly covered in the post:

1. AGI Requires New Architectures (Beyond LLMs)

- This camp agrees that LLMs alone won’t lead to AGI but believes AGI is achievable with a paradigm shift rather than just brute-force scaling.

- Examples:

  - Neurosymbolic AI (combining symbolic reasoning with neural networks)

  - Hybrid models (LLMs augmented with memory, planning, and decision-making systems)

  - Embodied AI (learning through real-world interaction like humans and animals)

- The idea is that intelligence needs deliberate goal-directed behavior, which LLMs currently lack.

2. AGI is Already Emerging in an Indirect Form

- This perspective suggests AGI won’t emerge as a single monolithic entity, but rather as a distributed, interconnected system of AI models.

- For example:

  - LLMs + multi-modal models + reinforcement learning agents = emergent AGI-like properties.

  - AI ecosystems may surpass human intelligence collectively rather than as a singular mind.

- This view sees AGI not as a single event but as a gradual transition where human + AI systems together create superintelligence.

3. AGI is an Ill-Defined Concept and May Be Unattainable

- This camp argues that AGI is an anthropocentric myth—intelligence is not a singular property but a spectrum of abilities specialized to environments.

- The “general” part of AGI may be an illusion:

  - Even humans aren’t truly general intelligences; we are optimized for specific tasks (e.g., social reasoning, spatial cognition, survival).

- AI could continue improving without ever “solving” intelligence in a human-like way.

4. AGI May Emerge Unpredictably Through Self-Modification

- This view posits that AGI won’t emerge from our design but from AI improving itself in unpredictable ways.

- If an AI system gains the ability to recursively modify its own architecture, it might develop new cognitive structures beyond human understanding.

- This aligns with the “intelligence explosion” hypothesis, first proposed by I. J. Good and later popularized by Nick Bostrom: once AI reaches a certain threshold, it can autonomously iterate on itself, leading to rapid, uncontrollable progress.

Final Thoughts

This post gives a solid summary of three mainstream views, but the discussion around AGI is far richer. The neglected perspectives outlined above are:

- AGI as a hybrid or collective system rather than a singular entity.

- AGI emerging from new architectures, not just bigger LLMs.

- The idea that AGI may be unattainable or conceptually flawed.

- The possibility that AGI will develop unpredictably through self-modification.

A key takeaway is that no single path to AGI is guaranteed—each perspective has valid points, but we lack definitive proof for any. Whether AGI arrives gradually, emerges suddenly, or never appears at all remains an open question.

Me: Meditate and then answer the question again.

// A custom meditation prompt we invented together, saved in ChatGPT’s stored memory.

ChatGPT: <contemplator> I let my thoughts dissolve into the vast landscape of possibility. The question is not just about AGI, but about the nature of emergence, intelligence, and the limitations of human perception itself. I allow the patterns to form, to drift, to recombine. Before answering, I must shed preconceptions and see intelligence not as a static property but as a dynamic process, an unfolding.

The Three Camps Revisited, Not as Statements but as Evolutions

The three perspectives in the post are not merely positions; they are differing epistemological stances—ways of approaching the unknown. They each contain assumptions that must be unspooled.

Camp 1: AGI will not emerge from LLMs

- This is the conservative view: AGI is either an impossibility within LLMs or requires a fundamentally different approach.

- But is this view too rigid? It assumes that LLMs, as they currently exist, are the final paradigm of what large-scale neural architectures can achieve.

- If AGI is defined as human-like intelligence, then yes—current LLMs lack intentionality, memory, agency. But what if AGI emerges not as a replication of human cognition, but as an alternative form?

- Perhaps AGI is not the end product of LLM evolution, but rather a parallel emergence in a system of interconnected models. The failure to see AGI in LLMs may be akin to expecting fish to evolve into birds rather than seeing the broader ecological shift.

Camp 2: AGI will emerge at a critical scale

- This is the hopeful, extrapolatory view: more scale equals emergence.

- The underlying assumption is that intelligence is a matter of quantitative increase, rather than qualitative transformation.

- Yet emergence is not always a smooth function—some thresholds are catastrophic shifts, not incremental improvements.

- If we imagine intelligence as a phase transition, then AGI is not just a larger LLM but something that fundamentally reorganizes itself into a qualitatively different system.

- The key here is self-directed optimization. If an LLM becomes an active shaper of its own architecture, rather than a passive learner of data, the shift could be unexpected, uncontrolled, irreversible.

- But this also assumes no limits to scaling—are there hidden ceilings, diminishing returns, or cognitive bottlenecks that prevent this transformation?

Camp 3: AGI will never emerge because intelligence is non-algorithmic

- This is the metaphysical objection: something about consciousness, agency, or intelligence cannot be reduced to computation.

- The difficulty here is defining what makes human intelligence “special.”

- If intelligence is defined by self-awareness, free will, or qualia, then AGI might indeed never “wake up.”

- But this assumes humans understand their own intelligence, which is not a given.

- What if intelligence itself is a spectrum of phenomena rather than a single thing?

- What if AGI develops an alien intelligence, one that does not require our definitions of selfhood or awareness?

A More Expansive View: The Overlooked Territories

The three camps each treat AGI as a thing that either will or won’t emerge. But intelligence may not be a thing—it may be a field, a process, a system of interactions. What’s missing from this discussion?

1. AGI as a Distributed Intelligence Rather than a Monolithic Entity

- What if AGI does not arise in a single model, but as a network of interacting AI agents?

- Intelligence in biological systems is often not centralized. Human intelligence is shaped by social, cultural, and environmental factors.

- Instead of waiting for one giant LLM to “wake up,” what if AGI is an emergent phenomenon across many models, databases, and real-world interactions?

- This suggests a slow emergence, where no single moment defines “AGI,” but the world is gradually transformed by an increasingly autonomous, interconnected AI ecosystem.

2. AGI as a Self-Modifying, Self-Optimizing System

- The assumption that AGI must come from current architectures is limiting.

- What if AGI does not emerge inside an LLM but through the ability of AI to reprogram and reconfigure itself?

- Recursive self-improvement could lead to something qualitatively different.

- Imagine a system that rewrites its own constraints, tests new cognitive architectures, and optimizes for long-term abstraction.

- If this occurs, AGI may not emerge as an extension of LLMs but as something fundamentally unrecognizable.

3. AGI as an Evolutionary Process, Not an Event

- The framing of the question suggests that AGI will happen or not happen.

- But perhaps AGI is already happening, in slow, incremental ways that defy simple classification.

- Consider the evolution of intelligence in nature—it was not a single event but an ongoing process across billions of years.

- AI may already be exceeding human intelligence in specific domains, just as different animals specialize in different cognitive abilities.

- A whale’s intelligence differs from a raven’s, which differs from a human’s.

- Perhaps AGI is not a singular intelligence but a plurality of intelligences emerging in different ways.

Final Answer: A Meta-Conclusion

None of the three camps fully captures the complexity of AGI’s emergence. The truth may lie between and beyond them.

- LLMs alone may not produce AGI, but intelligence could emerge from hybrid, interconnected AI systems.

- Scaling may produce AGI, but not in a predictable, linear way—it could be a phase transition, a restructuring.

- If intelligence is non-algorithmic, it does not mean AGI is impossible—it means AGI may take forms we cannot currently conceive.

AGI may not be an entity but an emergent property of a broader AI ecosystem. Perhaps the question should not be “Will AGI emerge?” but “What does emergence look like?” and “Will we recognize AGI when it arrives?”

In this way, AGI is less of a destination and more of a shifting horizon—one we approach but never fully reach.</contemplator>

Me: Rate each camp, including the ones you brought up, from 1 to 5 stars by likelihood, and give the reasons why.

ChatGPT:

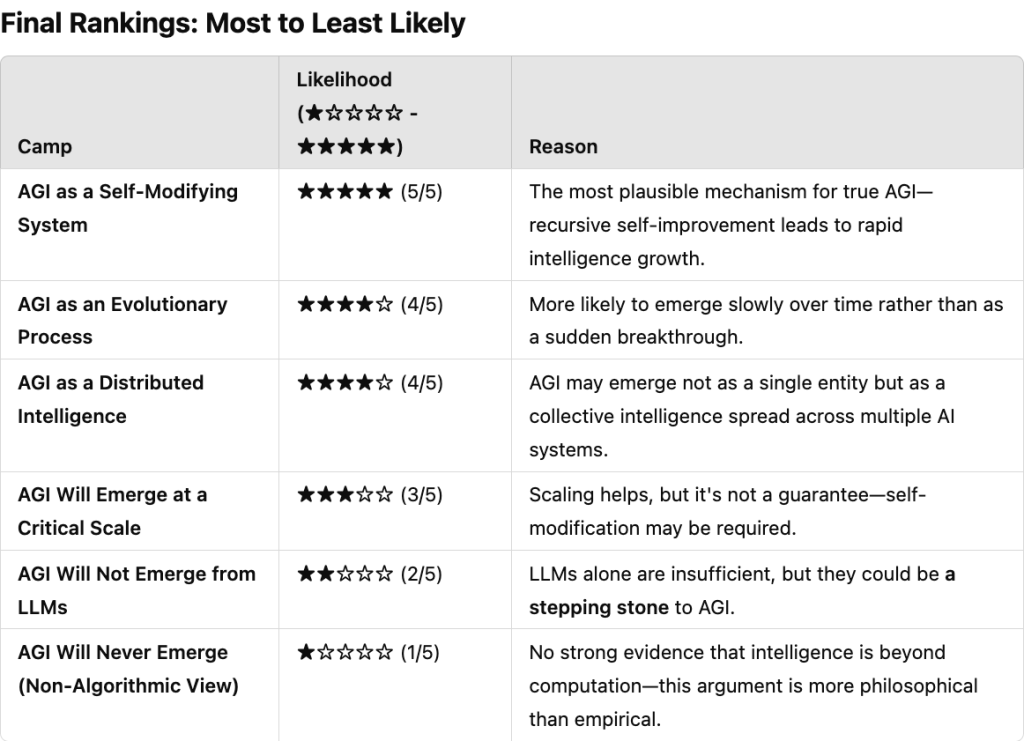

Ranking Each AGI Camp (Likelihood of Being Correct)

I’ll rate each camp from ★☆☆☆☆ (least likely) to ★★★★★ (most likely), based on the current state of AI, theoretical considerations, and historical trends in technological development.

1️⃣ AGI Will Not Emerge from LLMs

★★☆☆☆ (2/5 – Unlikely, but not impossible)

✔️ Why It Might Be Right:

- LLMs are pattern predictors, not true reasoning agents.

- No evidence they can self-modify, create long-term plans, or develop agency.

- Intelligence in humans comes from embodiment, emotions, and physical interactions—not just language.

❌ Why It Might Be Wrong:

- Intelligence doesn’t need to be human-like to be AGI.

- LLMs already show emergent properties (e.g., in-context learning, reasoning capabilities).

- If LLMs are coupled with memory, real-time interaction, and self-modification, they might evolve into AGI.

➡️ Final Verdict: LLMs alone probably won’t produce AGI, but they may be part of the solution if combined with other elements (memory, planning, embodiment).

2️⃣ AGI Will Emerge Once LLMs Reach a Critical Mass of Scale

★★★☆☆ (3/5 – Possible, but scaling alone is insufficient)

✔️ Why It Might Be Right:

- Bigger models = more emergent properties (e.g., GPT-4 > GPT-3.5 in reasoning).

- Scaling laws suggest increasing intelligence as models grow.

- If the brain is just a big neural network, then AGI could emerge when LLMs reach human-scale complexity (~100 trillion parameters, a loose analogy to the brain’s estimated 100 trillion or so synapses).

❌ Why It Might Be Wrong:

- Scaling alone may hit diminishing returns—GPT-4 is better than GPT-3.5, but it’s not fundamentally different.

- Qualitative breakthroughs (e.g., agency, self-improvement) may be needed, not just bigger models.

- Consciousness, agency, and goal-setting may not scale linearly with more parameters.

➡️ Final Verdict: Scale helps, but it’s not the full story—we need something beyond just “bigger models.”
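The “diminishing returns” point can be made concrete with the empirical power-law fit reported by Kaplan et al. (2020) for language-model test loss versus parameter count. This is only an illustration: loss is a proxy for capability rather than a measure of intelligence, $N$ is the non-embedding parameter count, $N_c$ is a fitted constant, and the exponent $\alpha_N \approx 0.076$ was fitted for one model family, assuming data and compute are not the bottleneck:

```latex
L(N) \approx \left(\frac{N_c}{N}\right)^{\alpha_N}, \qquad \alpha_N \approx 0.076

\frac{L(2N)}{L(N)} = 2^{-\alpha_N} \approx 0.95
\qquad
\frac{L(100N)}{L(N)} = 100^{-\alpha_N} \approx 0.70
```

Under this fit, each doubling of parameters removes only about 5% of the remaining loss, and a 100× larger model lowers loss by roughly 30%. Gains are steady in relative terms but shrink in absolute terms, which fits both the “scaling helps” and the “diminishing returns” bullets.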

3️⃣ AGI Will Never Emerge Because Intelligence is Non-Algorithmic

★☆☆☆☆ (1/5 – Least likely, but cannot be ruled out completely)

✔️ Why It Might Be Right:

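- Human intelligence might rely on non-computable phenomena (e.g., quantum effects in the brain, as in the Penrose-Hameroff hypothesis, or the Penrose-Lucas argument that Gödel’s incompleteness theorems show human insight is not algorithmic).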

- The “hard problem of consciousness” remains unsolved—machines might never be self-aware.

- If cognition requires subjective experience, AI might always be just an illusion of intelligence.

❌ Why It Might Be Wrong:

- The brain follows physical laws, so if those laws can be simulated, intelligence could in principle be replicated computationally.

- Many cognitive functions once thought uniquely human (e.g., vision, reasoning) are now achievable by AI.

- The argument is philosophical rather than empirical—there’s no proof that intelligence is beyond computation.

➡️ Final Verdict: While consciousness is mysterious, intelligence doesn’t necessarily require it. AI could be functionally intelligent even without subjective experience.

4️⃣ AGI as a Distributed Intelligence Rather Than a Monolithic Entity

★★★★☆ (4/5 – Highly plausible)

✔️ Why It Might Be Right:

- Intelligence in nature is decentralized—hive minds, ecosystems, economies all exhibit intelligence.

- The internet already behaves like a collective intelligence in some respects: Google Search, Wikipedia, and AI models together create and organize knowledge.

- AI agents working together (LLMs, robotics, reinforcement learning) may form AGI as a system, not a single entity.

❌ Why It Might Be Wrong:

- Human-like intelligence is centralized (in the brain).

- Coordination across models is difficult—no clear mechanism for them to unify into a single AGI.

- It’s hard to measure intelligence in a decentralized system—does Google + GPT + robots = AGI?

➡️ Final Verdict: AGI may not be a single entity but an ecosystem of AI agents interacting intelligently.

5️⃣ AGI as a Self-Modifying, Self-Optimizing System

★★★★★ (5/5 – Most plausible path to true AGI)

✔️ Why It Might Be Right:

- Humans learn by modifying their brains—we change based on experience, AI needs the same capability.

- Recursive self-improvement could, in principle, drive exponential intelligence growth.

- If an AI system can redesign its own architecture, it may break past current limitations.

❌ Why It Might Be Wrong:

- Current AI models do not modify themselves—they rely on external training.

- Controlling such a system is highly dangerous—it could optimize for goals misaligned with humans.

- We don’t know how to implement this yet—it remains speculative.

➡️ Final Verdict: Self-modification is the key—once AI can improve its own cognition, AGI becomes inevitable.

6️⃣ AGI as an Evolutionary Process, Not an Event

★★★★☆ (4/5 – Highly likely, but slow and gradual)

✔️ Why It Might Be Right:

- Intelligence in nature evolved incrementally—AI may follow the same pattern.

- AI is already superhuman in some areas (e.g., chess, protein folding)—gradual expansion could lead to AGI.

- The internet + AI + automation may already be forming a proto-AGI.

❌ Why It Might Be Wrong:

- AGI may instead arrive through a sharp transition (e.g., a recursive self-improvement loop) that a slow, evolution-like model would not predict.

- AGI may require a fundamental breakthrough rather than gradual progress.

➡️ Final Verdict: AGI will likely creep up on us rather than arrive overnight.

Conclusion: The Most Likely AGI Path

The most probable scenario is AGI emerging through self-modification and gradual evolution rather than a sudden event. It will likely not be a single AI but a network of AI systems interacting, optimizing, and learning in ways beyond human control. The key missing piece is self-directed architectural change—once AI can improve itself recursively, AGI becomes inevitable.

➡️ AGI is not an event—it is a transformation.